- TikTok has been found to show different comments to different groups of people, deliberately provoking confrontation and creating information cocoons.

- Amazon, Ctrip and other platforms use user profiles to adjust prices and increase profit.

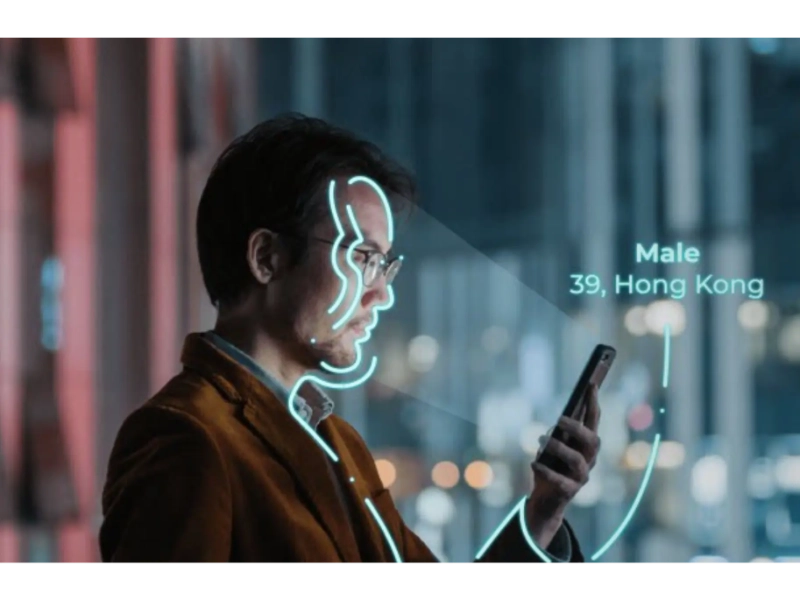

- Biometrics, which can recognise facial expressions, voices and even mouse movements, are increasingly being used.

In September 2023, a 23-year-old man from Shanxi, China, posted a TikTok video sharing a shocking discovery. Under a video of a couple arguing, the comments shown to him were entirely different from those shown to his girlfriend. TikTok’s comment section appeared to be deliberately antagonistic. In his girlfriend’s comment section, most comments were written from a woman’s perspective, and she also appeared to be seeing extremely vicious comments, seemingly chosen to start a fight. Men, on the other hand, were shown a very different picture.

Some Chinese netizens had a realisation: “Of course! No wonder I so often can’t see dissenting voices in the comments section.”

Others expressed deep concern about what this means: “Our vision is getting narrower and narrower, our thinking is getting more and more extreme, and our prejudices are getting more and more serious.”

The culprit of the information cocoon: personalisation

The truth is that this kind of tailored content, based on exquisitely detailed profiles that big tech companies build for each user, is expanding and seeping ever deeper into the various facets of our digital lives.

- Amazon: the first case of personalised pricing

You may assume that you see exactly the same product information, at exactly the same price, as your friend, colleague or parent sees when scrolling down the page. But back in 2000, Amazon ran a differential pricing experiment, tailoring the price of each of its 68 best-selling DVDs to each customer based on their information and behaviour, which greatly increased the gross margins on those sales. In effect, Amazon set a price uniquely for you based on your gender, age, hobbies, past spending and other personal information. The goal is a sweet spot: a price you can still afford to pay that earns the platform more.

How they build these profiles is where the real stuff of sleepless sci-fi nightmares begins. The way you move your mouse, the speed at which you type, the sounds you make, the frequency of your blinks, even your micro-expressions: big data can be collected from a myriad of behaviours you probably never think about when it comes to privacy, and fed into big tech algorithms.

“Together, these methods can look at subtle physical characteristics that are unique to the individual and unconscious (such as how a person walks),” says Ryan Payne, a researcher from the Queensland University of Technology.

According to Payne, behind all this is Biometric Pricing Technology (BPT), an important emerging application of biometric tracking. Today, most biometric pricing technologies are still at the static pricing stage, meaning prices are predetermined and not tied to real-time consumer interaction. It takes a period of time to collect enough biometric data from the user before the price is adjusted, moving through three phases: “click and wait”, “monitor” and “adjust after consideration”. More advanced BPT based on facial tracking allows real-time price adjustments, ushering in a truly dynamic pricing stage.
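To make the three static phases more concrete, here is a minimal toy sketch in Python. Everything in it is a hypothetical assumption for illustration: the `BiometricSample` fields, the interest score, the `min_samples` threshold and the 20% markup cap are invented, and this is not how Payne’s research or any real platform computes prices.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class BiometricSample:
    # Hypothetical signals; the features real systems use are not public.
    dwell_seconds: float  # how long the user lingered on the product page
    hesitation: float     # 0.0 (decisive) to 1.0 (very hesitant), e.g. inferred from mouse movement


def static_price(base_price, samples, min_samples=5, max_markup=0.2):
    """Toy 'click and wait' -> 'monitor' -> 'adjust after consideration' pricing."""
    # Phase 1, click and wait: until enough readings exist, keep the base price.
    if len(samples) < min_samples:
        return base_price
    # Phase 2, monitor: summarise all collected readings into an interest score in [0, 1].
    interest = mean(
        min(s.dwell_seconds / 60, 1.0) * (1 - s.hesitation) for s in samples
    )
    # Phase 3, adjust after consideration: mark up in proportion to interest, capped.
    return round(base_price * (1 + max_markup * interest), 2)
```

Waiting until `min_samples` readings exist before touching the price is what makes this “static” in the article’s sense; a dynamic, facial-tracking system would instead update the price on every new reading.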

- Ctrip: big data kills loyalty

We usually assume that loyal customers get cheaper prices. In reality, some companies and platforms make more profit by exploiting customers’ dependence on them.

A real-life example comes from Ms Hu, who always booked her flights and hotels through the Ctrip app and was therefore a Diamond VIP customer on the platform, entitled to a 20% discount.

In July 2020, Ms Hu booked a hotel through the Ctrip app as usual, paying RMB 2,889. When checking out, however, she happened to discover that the hotel’s actual listed price was only RMB 1,377.63. Not only did she miss out on the discount her VIP status should have earned her, she paid more than double the room rate.

- The industry chain behind pregnancy fraud

Some mother-and-baby apps have started using big data algorithms to push precisely targeted content once users add a pregnancy tag. Expectant mothers are shown postpartum confinement centres, mother-and-baby products and parenting tips. Fathers-to-be, by contrast, are pushed erotic videos and even flooded with text messages offering “special services”, luring men into irrational choices. After users download and register, some of these apps systematically remind them to invite the father to download the app and share in childcare, but behind this may lie a grey industry chain that lures men into cheating during their partner’s pregnancy.

“It’s a social validation feedback loop … exactly the kind of thing a hacker like me would come up with, because you’re exploiting a vulnerability in human psychology,” said Sean Parker, Facebook’s first president.

Confirmation Bias and Extreme Thinking

If individuals focus only on self-selected content or content they like, and reduce their exposure to other information, the information cocoon will keep reinforcing their existing beliefs and perspectives.

We develop the illusion that you and I are receiving the same information and that our online discussions rest on a shared set of facts. But the truth is that each of us lives in our own Truman Show, seeing a world tailored to us by algorithms. This can make people blind, extreme and easily manipulated. It makes them less and less willing to accept different points of view, can breed prejudice and discrimination, and fuels a growing antagonism that can end in social unrest.
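The self-reinforcing loop described above can be illustrated with a toy simulation. It is an assumption-laden sketch, not any platform’s real recommender: the topic names, the `amplify` exponent and the rule that the simulated user clicks everything shown are all hypothetical.

```python
def simulate_cocoon(clicks, rounds=10, feed_size=10, amplify=2.0):
    """Toy filter-bubble loop (hypothetical model, not a real recommender).

    Each round, the feed is split across topics in proportion to
    clicks ** amplify, and the simulated user clicks everything shown.
    Returns the share of all clicks held by the dominant topic after each round.
    """
    clicks = {topic: float(count) for topic, count in clicks.items()}
    history = []
    for _ in range(rounds):
        # Topics the user already clicked most get disproportionately many slots.
        weights = {topic: count ** amplify for topic, count in clicks.items()}
        total_weight = sum(weights.values())
        for topic, weight in weights.items():
            # Fractional feed slots keep the simulation deterministic.
            clicks[topic] += feed_size * weight / total_weight
        history.append(max(clicks.values()) / sum(clicks.values()))
    return history


shares = simulate_cocoon({"view A": 2, "view B": 1, "view C": 1})
print([round(s, 2) for s in shares])
```

With `amplify` above 1, the already-dominant view receives a larger share of each new feed than it holds in the click history, so its share rises every round; with `amplify=1.0` the shares stay constant, which is why the amplification step, not personalisation alone, drives the narrowing in this toy model.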

Jonathan Haidt, a social psychologist at New York University, said: “There has been a dramatic increase in depression and anxiety in the US adolescent population, with a sharp rise in the number of adolescents engaging in self-harm and even suicide, and even a 151 per cent increase in the number of self-harm episodes among girls aged 10-14. This pattern of increase points to social media”.

Sangeeta Waldron, founder, author and guest lecturer at Serendipity PR & Media, said: “The danger of being in our echo chambers is that it can perpetuate fake news, stunt our views, and as we have also seen affect how people decide to vote during elections.”

This is one facet of the information cocoon and algorithmic trap that society as a whole is falling into.

The hidden hand

- Biometrics

Payne also described further scenarios where biometrics could be used, including assessing a student’s academic potential, selecting the ideal military or employee recruit, and personalising movie trailers. Even a person’s income, sexual orientation, race, marital status, IQ, political affiliation and emotions (especially negative ones) would have nowhere to hide from this almost omnipotent technology.

Payne predicts this could happen in the near future: “Kids in learning could get personalized content, doctors would know what level of language to use, and the emotions of patients they treat; if you could predict IQ you could help people find careers within their abilities – get a higher sense of fulfillment.”

“However, through social media and the massive growth of CCTV, combined with a lack of ability to own a piece of land, the ability to conduct […] So we have, in a way, created a self-imposed panopticon prison. Not by profiling, but by the growth of tracking and judging, and the thirst for user-generated content,” says Payne.

- Feature extraction in sentiment analysis

Sentiment analysis is a technique that automatically extracts, classifies and summarises sentiments and emotions from online text. It allows machines to gauge public opinion about products, services, policies and politics, enabling platforms to make personalised recommendations and businesses and governments to monitor public opinion.
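As a minimal illustration of the extract, classify and summarise steps, here is a lexicon-based sentiment scorer in Python. The tiny word lists and the one-word negation rule are hypothetical simplifications; real systems typically rely on large curated lexicons or trained machine-learning models.

```python
import re
from collections import Counter

# Hypothetical toy lexicons; real systems use far larger curated or learned ones.
POSITIVE = {"good", "great", "love", "happy", "fair"}
NEGATIVE = {"bad", "awful", "hate", "angry", "unfair"}
NEGATORS = {"not", "never", "no"}


def extract_features(text):
    """Feature extraction: turn raw text into lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def classify(text):
    """Classification: sum word polarities, flipping a word preceded by a negator."""
    tokens = extract_features(text)
    score = 0
    for i, tok in enumerate(tokens):
        polarity = (tok in POSITIVE) - (tok in NEGATIVE)  # +1, -1 or 0
        if polarity and i > 0 and tokens[i - 1] in NEGATORS:
            polarity = -polarity  # "not good" counts as negative
        score += polarity
    label = "positive" if score > 0 else "negative" if score < 0 else "neutral"
    return label, score


def summarise(comments):
    """Summarisation: count sentiment labels across a batch of comments."""
    return Counter(classify(c)[0] for c in comments)


print(classify("This is not good"))
```

Running `summarise` over a whole comment section gives the kind of aggregate opinion signal that, in the article’s framing, platforms can feed back into recommendations.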

Breaking out of the cocoon: the power to decide lies with the user

Users have the right to determine the flow of their information and can actively manage their data through privacy settings and data authorisation. Following the introduction of China’s Regulations on the Administration of Information Services of Mobile Internet Applications, several well-known platforms have added a feature to turn off personalised recommendations, but have deliberately hidden the switch in an obscure corner of their apps. As things stand, users need to defend their own rights and interests and take an active part in how they interact with merchants’ information.

Lindsey Chastain, founder and CEO at The Writing Detective, offers this advice: “Recognising personalised results are biased by the platform’s business incentives – maintain a healthy scepticism.”

Beyond that, users need to take the initiative to seek out first-hand information rather than consuming information that has already been processed for them. Only by drawing on a wide diversity of sources can we develop the ability to judge whether information is authentic, and build a more comprehensive and objective understanding of the world. If users stop actively searching for, reading and integrating information, and instead passively accept the so-called “content of interest” that algorithms stuff into their feeds, they will never break out of their existing knowledge structures.

Remember: we need to make ourselves the starting point of information reception, not the end point. At the very least, so that big businesses cannot keep fleecing us, we should all put up some resistance to the information cocoon!