- The roots of natural language processing (NLP) trace back to the 1950s with early projects like the Georgetown-IBM experiment, which demonstrated the potential of machine translation.

- Noam Chomsky’s transformational-generative grammar, introduced in the late 1950s and developed through the 1960s, provided a theoretical framework for analysing syntactic structures, significantly influencing early NLP research.

- The advent of deep learning and neural networks in the 2000s, driven by pioneers like Geoffrey Hinton, Yoshua Bengio, and Yann LeCun, revolutionised NLP, leading to breakthroughs with models like transformers.

Natural language processing (NLP) is a fascinating field at the intersection of computer science, artificial intelligence, and linguistics. It involves the development of algorithms and systems that enable computers to understand, interpret, and generate human language. But who exactly invented NLP? The answer isn’t straightforward, as NLP’s development is the result of contributions from numerous researchers and advancements over many decades.

Early foundations: The 1950s and 1960s

The roots of NLP can be traced back to the early days of computer science and artificial intelligence. In the 1950s, researchers began exploring the idea of using computers to process human language. One of the earliest significant projects was the Georgetown-IBM experiment in 1954, where a machine translation system was developed to translate Russian sentences into English. This project demonstrated the potential of NLP and sparked further interest and research in the field.

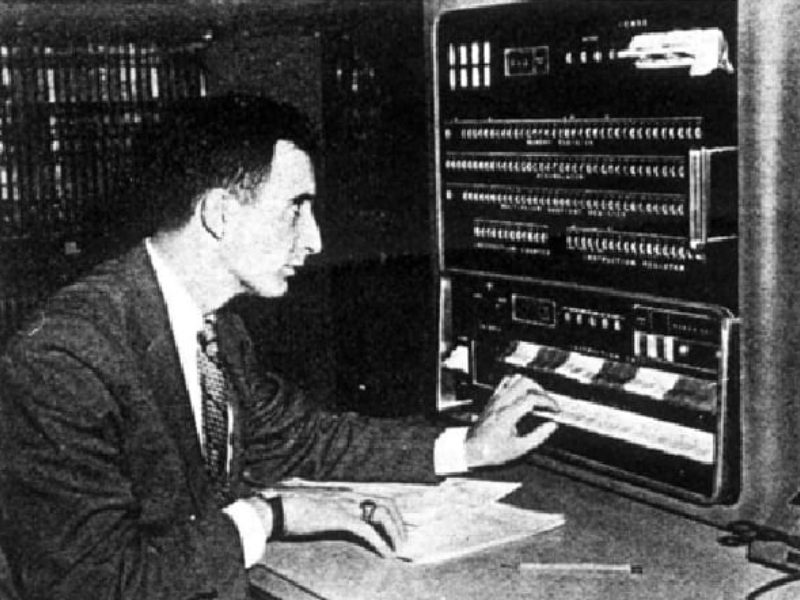

Key figure: Warren Weaver

Warren Weaver, a mathematician and pioneer of machine translation, proposed using statistical methods to tackle the problem of language translation. In his influential 1949 memorandum, he suggested that translation could be treated as a form of cryptography: a text in a foreign language could be viewed as a coded message that a computer might decode. Weaver’s ideas laid the groundwork for future research in NLP and machine translation.

The rise of formal linguistics: The 1960s and 1970s

The 1960s and 1970s saw the rise of formal linguistics, which significantly influenced the development of NLP. Noam Chomsky, a prominent linguist, had introduced transformational-generative grammar in his 1957 book Syntactic Structures, a theory that revolutionised the understanding of syntax and grammar in human language. Chomsky’s work provided a theoretical framework for parsing and analysing sentences, which became a cornerstone of early NLP research.

Key figure: Noam Chomsky

Chomsky’s theories on syntax and grammar were instrumental in shaping the direction of NLP. His transformational-generative grammar model offered a structured way to analyse the syntactic structure of sentences, influencing the development of early NLP algorithms and systems.

The advent of machine learning: The 1980s and 1990s

The 1980s and 1990s marked a significant shift in NLP research with the advent of machine learning techniques. Researchers began using statistical methods and probabilistic models to analyse and generate human language. This era saw the development of key algorithms and models that form the foundation of modern NLP.

Key figure: Frederick Jelinek

Frederick Jelinek, a pioneer in the field of speech recognition, made substantial contributions to the application of statistical methods in NLP. His group at IBM’s Thomas J. Watson Research Center pioneered the use of hidden Markov models (HMMs) for speech recognition, an approach that was later adapted for various NLP tasks. A remark widely attributed to Jelinek, “Every time I fire a linguist, the performance of the speech recogniser goes up,” captures the growing importance of statistical approaches in NLP at the time.

The era of deep learning: The 2000s and beyond

The 2000s and beyond have witnessed a revolution in NLP with the advent of deep learning and neural networks. These techniques have dramatically improved the performance of NLP systems, enabling breakthroughs in tasks such as machine translation, sentiment analysis, and text generation.

Key figures: Geoffrey Hinton, Yoshua Bengio, and Yann LeCun

Geoffrey Hinton, Yoshua Bengio, and Yann LeCun, often referred to as the “godfathers of deep learning,” have made groundbreaking contributions to the development of neural networks and deep learning models. Their work has had a profound impact on NLP, laying the groundwork for techniques such as word embeddings, recurrent neural networks (RNNs), and, later, transformers, which have significantly advanced the state of the art in the field.

Modern NLP and the role of transformers

One of the most significant recent advancements in NLP is the development of transformer models, such as BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer). These models have set new benchmarks in various NLP tasks and have been widely adopted in both academia and industry.

Key figures: Ashish Vaswani and the Google Brain team

Ashish Vaswani and his colleagues at Google Brain introduced the transformer model in their seminal 2017 paper “Attention Is All You Need.” By replacing recurrence with a self-attention mechanism, the model allowed computation to be parallelised across an entire sequence and improved performance across a range of tasks. The transformer architecture has since become the foundation for many state-of-the-art NLP models, including BERT and GPT.

Natural language processing is a field that has evolved through the contributions of many brilliant minds over several decades. From the early days of machine translation and formal linguistics to the modern era of deep learning and transformers, NLP has been shaped by the work of researchers who have pushed the boundaries of what is possible. While it is difficult to attribute the invention of NLP to a single individual, the collective efforts of pioneers like Warren Weaver, Noam Chomsky, Frederick Jelinek, and the deep learning triumvirate of Hinton, Bengio, and LeCun have been instrumental in bringing us to the current state of the art in NLP. As we continue to advance, the contributions of today’s researchers will undoubtedly shape the future of this exciting and dynamic field.