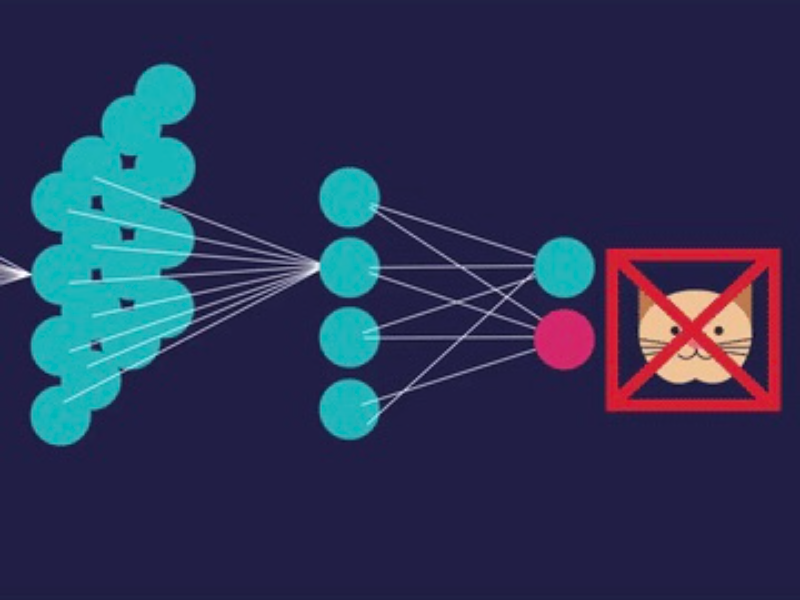

- Hidden layers in neural networks are intermediate layers that process and transform input data to enable the network to learn and make predictions.

- Different types of hidden layers, such as fully connected, convolutional, and recurrent layers, handle different aspects of data processing, making deep neural networks versatile in tasks such as image recognition and sequence prediction.

Hidden layers are crucial components of neural networks that process and transform input data, enabling the network to learn and make predictions. These layers empower neural networks to handle complex tasks across various applications, such as image recognition and sequence prediction.

Definition of hidden layers in neural networks

The hidden layers of a neural network are the intermediate layers of neurons (or nodes) that sit between the input layer and the output layer. The input layer receives external data and the output layer provides the result. Hidden layers do neither: the values they compute are internal to the network, which is why they are called "hidden".

Their primary function is to analyse and transform the input data. Each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function, and the weights are adjusted during training. This allows the neural network to learn patterns, recognise features, and make predictions. Adding hidden layers increases the depth of the network and the complexity of what it can represent. Networks with many hidden layers are the basis of deep learning.
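To make the weighted calculation concrete, here is a minimal sketch of one hidden layer's forward pass in plain Python. The function names (`relu`, `dense_forward`), the ReLU activation, and the weight and bias values are illustrative assumptions, not taken from any particular library or trained model.

```python
def relu(x):
    """A common activation function: negative values become zero."""
    return max(0.0, x)


def dense_forward(inputs, weights, biases, activation=relu):
    """Forward pass of one hidden layer.

    weights[j] holds the weights of hidden neuron j, one weight per input.
    Each neuron computes a weighted sum of the inputs, adds its bias,
    and applies the activation function.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


# Two input values feeding a hidden layer of three neurons (made-up numbers).
inputs = [1.0, 2.0]
weights = [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]]
biases = [0.0, -1.0, 0.5]

hidden = dense_forward(inputs, weights, biases)
print(hidden)  # [0.0, 2.0, 0.5]
```

In a real network the weights and biases would be learned during training rather than written by hand, and the output of this layer would become the input of the next.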

Different types of hidden layers in neural networks

1. Fully connected layers: Every neuron in this layer is connected to every neuron in the previous layer. These layers are common in many types of neural networks, especially in the final stages of processing before the output layer, where they combine features extracted in earlier layers to make decisions or classifications.

2. Convolutional layers: These layers apply convolutional filters to the input data, detecting patterns such as edges, textures, or other visual features. They are predominantly used in Convolutional Neural Networks (CNNs) for tasks involving image or video processing.

3. Pooling layers: Pooling layers reduce the size of the data by summarising each region, for example by taking its maximum or average value, which makes the network more computationally efficient. They are often found in CNNs, where they follow convolutional layers to down-sample the data.

4. Recurrent layers: Recurrent layers have connections that loop back to the same or previous layers, allowing the network to maintain a “memory” of previous inputs. They are used in Recurrent Neural Networks (RNNs) for tasks involving sequences, such as time series prediction or natural language processing.

5. LSTM and GRU layers: Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) layers are specialised types of recurrent layers designed to handle long-term dependencies in sequence data. They are employed in advanced RNNs for tasks that require long-term contextual information, such as machine translation or speech recognition.

6. Dropout layers: Dropout layers randomly deactivate a portion of the neurons during training to prevent overfitting. They are commonly used in various network architectures to improve generalisation.

7. Batch normalisation layers: These layers normalise the outputs of the preceding layer across each mini-batch, which typically speeds up training and makes it more stable. They are widely used across different neural network architectures.

8. Generative Adversarial Network (GAN) layers: A GAN is not a single layer type but a pair of networks, each with its own hidden layers: a generator, which creates fake data, and a discriminator, which attempts to distinguish real data from fake. GANs are used to generate realistic images, text, or other data types.
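As an illustration of the convolutional and pooling layers in the list above, the following sketch applies one hand-written filter to a tiny image and then down-samples the result. The helper names (`conv2d_valid`, `max_pool2d`), the 5x5 image, and the edge-detecting kernel are assumptions made for the example. Real CNNs learn their filter values and use optimised library routines.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding.

    As in most deep learning libraries, the kernel is not flipped,
    so this is strictly a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(kh)
                for b in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling: keep the largest value in each block.

    Trailing rows or columns that do not fill a whole block are dropped.
    """
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(
                feature_map[i * size + a][j * size + b]
                for a in range(size)
                for b in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A 5x5 image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# A hand-written filter that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]

features = conv2d_valid(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)           # 2x2 summary

print(features[0])  # [0, 2, 0, 0]
print(pooled)       # [[2, 0], [2, 0]]
```

The feature map is non-zero only where the edge sits, and pooling keeps that signal while shrinking the map from 4x4 to 2x2.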

Each type of hidden layer is designed to handle specific aspects of data processing, contributing to the overall ability of the neural network to learn and perform tasks effectively.
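Two of the other layer types can be sketched just as briefly: a simple recurrent layer and a dropout layer. The names `rnn_step`, `run_rnn`, and `dropout`, the scalar weights, and the tanh activation are illustrative assumptions. The dropout shown is the common "inverted" variant, which rescales the surviving activations during training, and that detail is an assumption beyond what the text above states.

```python
import math
import random


def rnn_step(x, h_prev, w_x, w_h, bias):
    """One step of a simple recurrent layer with a scalar state:
    h_t = tanh(w_x * x_t + w_h * h_{t-1} + bias)."""
    return math.tanh(w_x * x + w_h * h_prev + bias)


def run_rnn(sequence, w_x=0.5, w_h=0.8, bias=0.0):
    """Feed a sequence through the layer, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, bias)
        states.append(h)
    return states


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: during training, zero each activation with
    probability `rate` and scale the survivors by 1 / (1 - rate).
    At inference time the activations pass through unchanged.
    Assumes 0 <= rate < 1."""
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Only the first input is non-zero, yet the later states stay non-zero:
# the hidden state acts as the layer's "memory" of earlier inputs.
states = run_rnn([1.0, 0.0, 0.0])
print(states)

rng = random.Random(0)  # seeded so the run is repeatable
dropped = dropout([1.0] * 8, rate=0.5, rng=rng)
print(dropped)  # each entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
```

LSTM and GRU layers follow the same step-by-step pattern but add gates that control what the state keeps and forgets.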