- The multi-objective optimisation problem is a hot issue in the field of optimisation, which refers to the simultaneous optimisation of multiple conflicting objectives.

- The popular approach to scaling up data processing is to use parallel processing to distribute calculations across multiple processors.

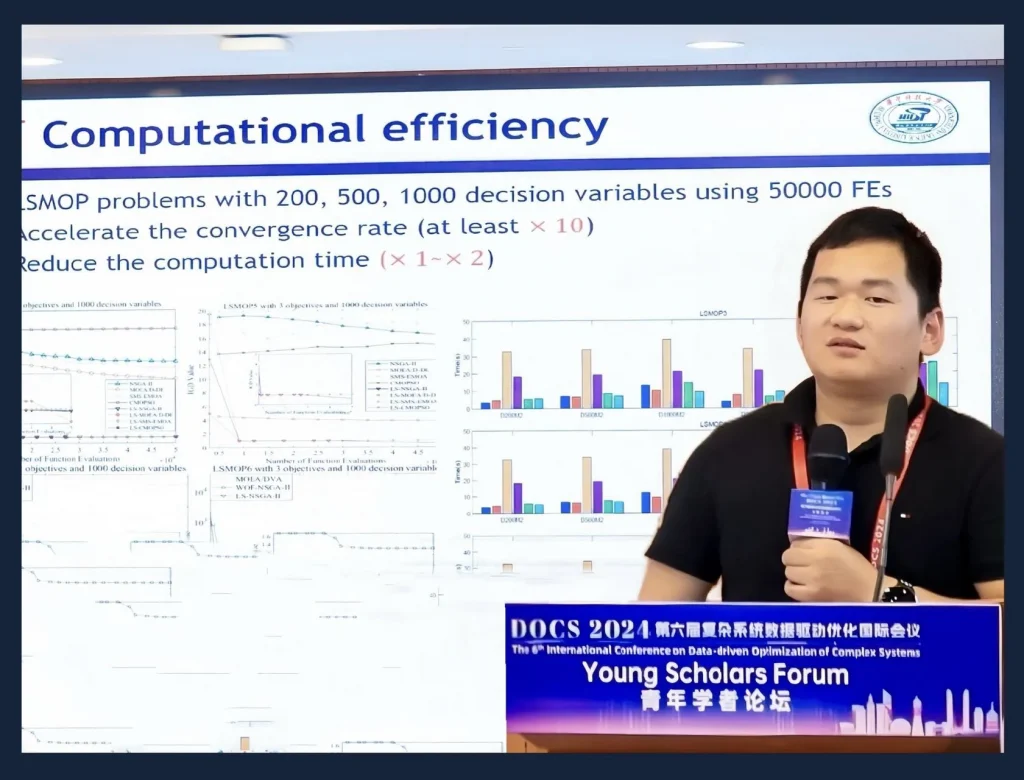

Evolutionary algorithms (EAs) have been a popular optimisation tool for decades, showing promising performance on various benchmark optimisation problems. Nevertheless, applying EAs to large-scale optimisation problems (LSOPs), meaning problems with more than 100 decision variables, remains challenging due to the “curse of dimensionality”, especially for LSOPs in real-world applications.

Introduction to Dr. Cheng He

Dr. Cheng He is a professor at Huazhong University of Science and Technology, one of China’s leading universities. His research interests are artificial/computational intelligence and its applications, and he has published more than 40 SCI papers. He is an IEEE Senior Fellow and Associate Editor of Complex and Intelligent Systems. He is a board member of PLOS One and Electronics, and Chair of the IEEE CIS Intelligence Working Group. His current research topic is computational intelligence and its application in the power grid.


Q: In the algorithm, what is multi-objective optimisation?

That’s an interesting question. Multi-objective optimisation is a topic in the optimisation field; it means optimising multiple conflicting objectives simultaneously. Let’s take an example: when designing a car, you want it to be safe and cheap, but also to perform very well. Is that possible? Usually it is not, because you need to balance price, safety and performance. So multi-objective optimisation tries to find the best trade-offs between these three conflicting objectives.
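The car example can be made concrete with a small sketch of Pareto dominance, the usual way of comparing solutions when objectives conflict. The designs, their numbers and the function names `dominates` and `pareto_front` are hypothetical and chosen only for illustration; all three objectives are assumed to be minimised.

```python
from typing import List, Tuple

# Hypothetical car designs: (price in thousands, risk score, 0-100 km/h time in s).
# Every objective is minimised; the values are invented for illustration.
Design = Tuple[float, float, float]


def dominates(a: Design, b: Design) -> bool:
    """True if a is no worse than b on every objective and better on at least one."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep only the designs that no other design dominates."""
    return [
        d for d in designs
        if not any(dominates(other, d) for other in designs if other != d)
    ]


designs = [
    (20.0, 5.0, 9.0),   # cheap, less safe, slow
    (35.0, 2.0, 6.0),   # balanced
    (30.0, 5.0, 9.5),   # dominated by the first design
    (50.0, 1.0, 4.0),   # expensive, safe, fast
]
front = pareto_front(designs)
print(front)
```

No single design in `front` wins on price, safety and performance at once; each one is a different trade-off, which is what a multi-objective optimiser is asked to find.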

Q: You also mentioned large-scale optimisation in your presentation. What is it?

Large-scale optimisation is a challenging problem in the optimisation field. Let’s take an example: if we want to design a product, we usually have only a few decision variables, such as height and weight. But consider a problem that includes hundreds, thousands or even billions of decision variables. That is a huge search space, and solving such a problem is time-consuming and often impossible. That’s large-scale optimisation, and it remains a challenging problem in the field.
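A rough way to see why the search space becomes unmanageable: assume, purely for illustration, that each decision variable may take only 10 candidate values. An exhaustive grid then contains 10 to the power of the number of variables. The helper `grid_size` below is a hypothetical name for that calculation.

```python
def grid_size(num_variables: int, values_per_variable: int = 10) -> int:
    """Number of candidate solutions on an exhaustive grid (illustrative assumption)."""
    return values_per_variable ** num_variables


for n in (3, 10, 100, 1000):
    # Print the number of decimal digits instead of the full integer.
    print(f"{n} variables -> grid with {len(str(grid_size(n)))} digits")
```

Even with this coarse grid, the count grows exponentially with the number of variables, which is the “curse of dimensionality” in practice.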

Q: In large-scale optimisation, the scale ranges from thousands to millions or even billions of variables. What can we do?

The current popular approach is to use parallel processing to distribute the computation across multiple processors or machines. In addition, distributed computing frameworks such as Apache Hadoop or Apache Spark can handle large data sets by distributing data and processing across clusters of computers. Techniques like principal component analysis (PCA) can reduce the number of variables in a data set while retaining most of the variation in the data. At the same time, we can apply model pruning to reduce the complexity of a model by removing the parts that are not needed, such as redundant neurons in a neural network.
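The first idea, spreading evaluations across workers, can be sketched with Python’s standard library. This is a minimal illustration under stated assumptions, not the setup used in Dr. He’s work: the `sphere` objective, the population size and the function `evaluate_population` are hypothetical, and a thread pool is used only to keep the example short. For CPU-bound objectives in Python, a process pool or a cluster framework would be the realistic substitute.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def sphere(x: Sequence[float]) -> float:
    """Hypothetical stand-in objective: sum of squares, to be minimised."""
    return sum(v * v for v in x)


def evaluate_population(
    population: List[List[float]],
    objective: Callable[[Sequence[float]], float],
    workers: int = 4,
) -> List[float]:
    """Evaluate every candidate on a pool of workers, preserving input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(objective, population))


rng = random.Random(0)
# 20 candidate solutions, each with 1,000 decision variables.
population = [[rng.uniform(-1.0, 1.0) for _ in range(1000)] for _ in range(20)]
fitness = evaluate_population(population, sphere)
best = min(range(len(population)), key=lambda i: fitness[i])
print(f"best candidate: {best}, objective value: {fitness[best]:.3f}")
```

Because each candidate is evaluated independently, the evaluation step of a population-based optimiser is a natural place to parallelise.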

Q: You recommended the LSMOF model in your report. How does it help to solve practical problems?

LSMOF is an algorithm designed to accelerate the optimisation process for large-scale multi-objective optimisation problems; that acceleration is its main contribution. As we said, an algorithm may take, let’s say, hours or days to optimise such a problem, but with this component it can be accelerated to several minutes. So in real-world applications, you can use the LSMOF component as a method for quickly approximating good local optima, which accelerates the design process.

Q: Another thing that really interests me is TREE. What efforts have you made in its research?

We used TREE technology in our online collaboration on voltage transformers in China, where we deployed this method in 29 provinces to monitor more than 20,000 voltage transformers, and it has been documented as one of the most effective ways to monitor this equipment, ensuring the safety of the grid.

Cheng He, professor at Huazhong University of Science and Technology

That’s an interesting question. The TREE problem is a real-world application problem: the ratio error estimation of voltage transformers. The voltage transformer is a fundamental but crucial device in the power grid. It measures the grid voltage for control, safety guarantees and many other important purposes, so we need to monitor its health condition. Conventionally, this requires people to calibrate the device manually, which means cutting off the power, and that is dangerous and expensive.

But if we turn that problem into an optimisation problem, you just need a computer to do some calculations to get the health condition of the device. That saves labour, is safe, and helps ensure the safety of the power grid. That is what we have done.

Significance of research

Research on optimisation algorithms is of great significance to the future development of networks. These algorithms make the decision-making process more efficient and are critical to managing the complexity and scale of modern networks. By accelerating the optimisation of design variables and balancing conflicting goals, they pave the way for innovative solutions in network architecture, resource allocation, and business optimisation.