- Deepfakes are convincingly fabricated audio, video, or images created with deep learning techniques.

- While they have uses in entertainment and customer service, their darker applications include spreading false information and facilitating identity fraud.

- The ongoing arms race against deepfakes drives the development of detection tools like Sentinel and Sensity AI, as well as collaborative initiatives like C2PA.

AI-generated deepfakes can convincingly fabricate audio, video, and images. Their uses range from benign applications in entertainment to malicious ones such as spreading misinformation and facilitating identity fraud. To counter the threat, detection tools such as Sentinel and Sensity AI are emerging, alongside industry initiatives such as C2PA.

What is deepfake AI?

Deepfake AI is a form of artificial intelligence used to produce convincing fake images, audio, and video. The term refers both to the technology itself and to the deceptive content it produces, and is a portmanteau of “deep learning” and “fake”.

Deepfakes commonly manipulate existing source material by substituting one person for another. They can also generate entirely new content depicting people doing or saying things they never actually did.

The primary concern surrounding deepfakes is their potential to disseminate false information that appears to originate from reliable sources. For instance, in 2022, a deepfake video emerged featuring Ukrainian president Volodymyr Zelenskyy purportedly urging his troops to surrender.

There are also concerns about election interference and the spread of election propaganda. “Political consultants, campaigns, candidates and even members of the general public are forging ahead in using the technology without fully understanding how it works or, more importantly, all of the potential harms it can cause,” said Carah Ong Whaley, academic program officer for the UVA Center for Politics. “I am especially concerned about the use of AI for voter manipulation – not just through deepfakes, but through the ability of generative AI to be microtargeting on steroids through text message and email campaigns,” she said.

Despite these significant risks, deepfakes also have legitimate applications, including video game audio and entertainment, as well as customer support and caller response systems such as call forwarding and receptionist services.

Applications of deepfake technology

Negative applications

Blackmail and reputation harm: These cases arise when a target’s likeness is placed in an unlawful, inappropriate, or otherwise compromising scenario, such as lying to the public, engaging in explicit sexual activity, or taking drugs. Such videos are used to coerce victims, damage reputations, seek revenge, or cyberbully. The most prevalent form of deepfake blackmail or revenge is nonconsensual deepfake pornography, often referred to as revenge porn. In 2019, an app called DeepNude appeared that could render a woman nude with a single click; it quickly spread and was used maliciously, particularly to harass women.

False evidence: Fabricated deepfake images or audio can be presented as evidence in legal proceedings, either falsely implicating innocent people or exonerating those guilty of wrongdoing.

Fraud: Deepfakes are employed to impersonate individuals, often for the purpose of obtaining sensitive personal information such as banking details or credit card numbers. This impersonation can extend to high-ranking company executives or employees with access to confidential data, posing significant cybersecurity threats.

According to IEEE Spectrum, “Identity fraud was the top worry regarding deepfakes for more than three-quarters of respondents to a cybersecurity industry poll by the biometric firm iProov.”

Misinformation and political manipulation: Deepfake videos of politicians and other trusted figures are used to manipulate public opinion and sow confusion, often feeding the spread of fake news. Many prominent figures have been targeted by fake videos, including US presidents Barack Obama and Donald Trump, US politician Nancy Pelosi, and former German chancellor Angela Merkel; even Facebook founder Mark Zuckerberg has been targeted. The deepfake video of Ukrainian President Volodymyr Zelenskyy shows how deepfakes can exacerbate conflicts and destabilise volatile situations.

Stock manipulation: Forged deepfake content can influence stock prices, with fake videos featuring executives making damaging statements about their companies leading to stock depreciation. Conversely, fabricated videos promoting technological breakthroughs or product launches can artificially inflate stock values.

Pop quiz

How do deepfake videos of politicians or trusted figures contribute to misinformation?

A. By providing accurate information to the public

B. By manipulating public opinion and sowing confusion

C. By exposing political corruption

D. By promoting transparency in government

The correct answer is at the bottom of the article.

Positive applications

Art: Deepfakes are used to generate new music compositions from existing recordings of artists’ work, enabling new approaches to composition and remixing. Deepfake technology has also democratised the creation of artwork, making it accessible to a wider range of people and enabling artists to produce innovative pieces that offer audiences unique experiences. For example, the Dalí Museum in St. Petersburg, Florida, used deepfake technology to bring Salvador Dalí to life, allowing visitors to interact with an AI-generated version of the renowned artist.

Digital marketing: Deepfake technology is increasingly used in digital marketing to create engaging, immersive content. Marketers can use deepfakes to produce highly personalised advertisements and promotional materials tailored to individual preferences and demographics. For instance, deepfakes can superimpose products onto real-life scenes, letting consumers visualise how a product would fit into their own environment. The technology also supports compelling storytelling campaigns that strengthen brand engagement and customer loyalty, and it lets marketers repurpose existing content in new ways, increasing the return on advertising spend.

“This technology is increasingly applied in digital marketing, enabling the company to reduce its costs, design promotional campaigns more easily, personalise its offer, enable unique experiences for its consumers, but also raise the awareness of the target market about certain sensitive issues of social importance,” said Radoslav Baltezarevic, Vice-Dean for Graduate Studies and Scientific Research, Professor in Marketing, Communication & Management, Megatrend University.

Caller response services: These services leverage deepfakes to provide personalised responses to caller inquiries, enhancing customer engagement and satisfaction, particularly in call forwarding and receptionist tasks.

Customer phone support: Utilising synthetic voices generated by deepfake technology, customer support services streamline routine tasks such as checking account balances or lodging complaints, improving efficiency and user experience.

Entertainment: The entertainment industry harnesses deepfakes for various purposes, including cloning and manipulating actors’ voices for scenes in movies and video games. This approach proves invaluable when logistical constraints make traditional filming challenging or when actors are unavailable for voice recording during post-production. Additionally, deepfakes contribute to satire and parody content, offering audiences humorous insights and creative interpretations of familiar figures. An illustrative example is the 2023 deepfake featuring Dwayne “The Rock” Johnson as Dora the Explorer, showcasing the potential for playful experimentation with deepfake technology.

Deepfake detector tools & techniques

A deepfake detection tool is software designed to identify deepfake videos, images, or audio. It typically applies several analysis methods to digital content to determine whether it has been manipulated or generated by AI.

As deepfakes proliferate, detection software is becoming increasingly popular as a defence against the harmful effects of fake video and audio. The global deepfake detection software market is estimated to grow at a CAGR of 38.3% from 2024 to 2029, while the fake image detection market is projected to grow from USD 0.6 billion in 2024 to USD 3.9 billion by 2029, a compound annual growth rate (CAGR) of 41.6% over the forecast period.
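
Market forecasts like these rest on the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the quoted fake image detection figures, treating 2024 to 2029 as five compounding years; the helper names `cagr` and `project` are illustrative and do not come from any market report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` for `years` periods."""
    return start_value * (1.0 + rate) ** years


# Quoted fake image detection market figures, in USD billions.
implied = cagr(0.6, 3.9, 5)          # rate implied by the two endpoints
projected = project(0.6, 0.416, 5)   # 0.6 grown at the reported 41.6%

print(f"implied CAGR: {implied:.1%}")                      # implied CAGR: 45.4%
print(f"0.6B at 41.6% for 5 years: {projected:.2f}B")      # 0.6B at 41.6% for 5 years: 3.42B
```

The two directions do not quite agree with the quoted 41.6% and USD 3.9 billion. That gap most likely reflects rounding of the published endpoint values, or a base year different from the one assumed here, so the figures are best read as approximate.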

OpenAI recently introduced a tool to detect images created by its AI image generator, DALL-E, as experts warn that AI-generated deepfakes could influence elections. The detector, which works on DALL-E images but not on those from other generators, will be tested by disinformation researchers. OpenAI is also working on watermarking AI-generated content and has joined industry efforts such as C2PA (the Coalition for Content Provenance and Authenticity) to establish digital content authenticity.

Even before OpenAI introduced its detector, several well-performing deepfake detectors were already available.

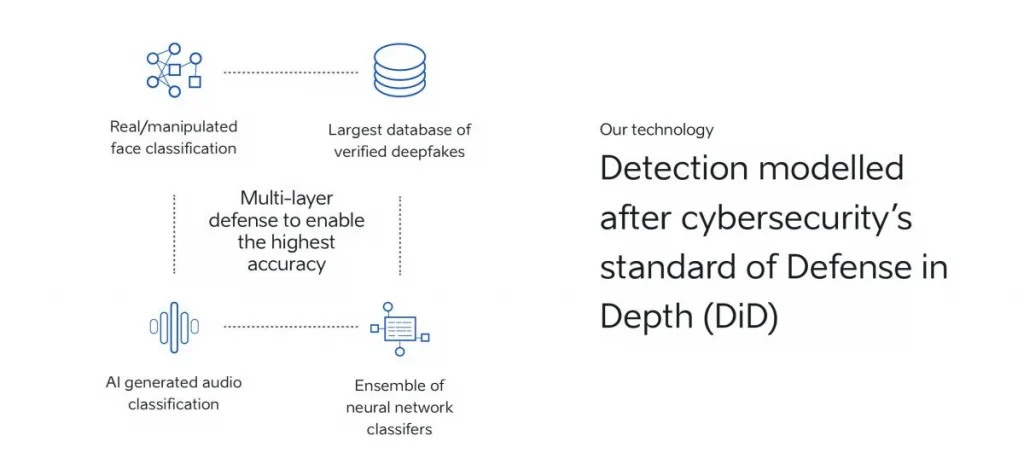

Sentinel: Sentinel is an AI-based protection platform that helps democratic governments, defence agencies, and enterprises stop the threat of deepfakes, and organisations across Europe rely on its technology. Users submit digital content through Sentinel’s website or API, and the system automatically analyses it for AI-based manipulation, determines whether it is authentic, and provides a visualisation of any alterations.

Deepware: Deepware is a user-friendly tool designed to detect deepfake videos. Using advanced machine learning algorithms, it scans video content for signs of manipulation, such as unnatural facial movements and inconsistencies in lighting and shadows. It provides a probability score indicating the likelihood of a video being a deepfake, helping users quickly assess its authenticity.

Sensity: Sensity offers a comprehensive platform for detecting deepfakes in real time. It combines computer vision and deep learning techniques to analyse video frames and audio signals. Sensity’s technology is used by governments and media organisations to protect against the spread of fake news and malicious content.

Microsoft’s Video Authenticator Tool: Microsoft’s Video Authenticator analyses still images and videos and provides a confidence score indicating the likelihood that the media has been manipulated. It detects the blending boundaries of deepfakes as well as subtle greyscale elements that may be imperceptible to the human eye. For videos, it reports this score in real time for each frame as the video plays, enabling rapid identification of deepfakes.
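
Tools such as Deepware and Microsoft’s Video Authenticator express their verdicts as a probability or confidence score. When a detector reports such a score for every frame, a reviewer still has to turn that stream of numbers into a decision about the whole clip. The Python sketch below shows one simple way to do this. It is not part of either product: the scores are hypothetical inputs between 0 and 1, and the `threshold` and `window` defaults are arbitrary illustrative values.

```python
from dataclasses import dataclass


@dataclass
class VideoSummary:
    mean_score: float                     # average of the raw per-frame scores
    peak_score: float                     # highest smoothed score
    flagged_spans: list[tuple[int, int]]  # inclusive (first, last) frame indices


def smooth(scores: list[float], window: int) -> list[float]:
    """Centred moving average; the window is truncated at the clip edges."""
    half = window // 2
    smoothed = []
    for i in range(len(scores)):
        lo, hi = max(0, i - half), min(len(scores), i + half + 1)
        smoothed.append(sum(scores[lo:hi]) / (hi - lo))
    return smoothed


def summarise(scores: list[float], threshold: float = 0.5, window: int = 5) -> VideoSummary:
    """Turn hypothetical per-frame manipulation scores into a clip-level summary."""
    if not scores:
        raise ValueError("need at least one frame score")
    smoothed = smooth(scores, window)
    spans, start = [], None
    for i, s in enumerate(smoothed):
        if s >= threshold and start is None:
            start = i                      # a suspicious run begins
        elif s < threshold and start is not None:
            spans.append((start, i - 1))   # the run ended on the previous frame
            start = None
    if start is not None:                  # the run lasts until the final frame
        spans.append((start, len(smoothed) - 1))
    return VideoSummary(sum(scores) / len(scores), max(smoothed), spans)


# Hypothetical scores: a clean clip with a manipulated stretch in the middle.
scores = [0.1] * 10 + [0.9] * 10 + [0.1] * 10
print(summarise(scores).flagged_spans)  # [(10, 19)]
```

Smoothing over a few neighbouring frames stops a single noisy frame from triggering a flag. This assumes that genuine manipulation usually spans consecutive frames. Reporting the flagged spans, rather than a single number, also shows a reviewer where in the clip to look.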

Intel’s FakeCatcher: Intel reports that FakeCatcher detects deepfakes with 96% accuracy and returns results within milliseconds. Developed in collaboration with Umur Ciftci of the State University of New York at Binghamton, it runs on a server using Intel hardware and software and is accessed through a web-based interface. Rather than searching for traces of manipulation, FakeCatcher looks for authentic signs of a real human in a video, such as the subtle “blood flow” visible in its pixels. As the heart pumps blood, veins change colour. FakeCatcher collects these faint signals from multiple regions of the face, algorithms convert them into spatiotemporal maps, and deep learning then uses those maps to classify the video as genuine or fabricated.
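
FakeCatcher’s “blood flow” cue is based on photoplethysmography (PPG), which tracks the periodic colour changes in skin that follow the heartbeat. The Python sketch below is a heavily simplified illustration of that general idea, not Intel’s method. It assumes NumPy, fixed rectangular face regions (a real system would locate and track the face), and the green channel as the signal source, which is a common choice in camera-based PPG. It also replaces the deep learning classifier with a simple spectral check. All function names and the heart-rate band are hypothetical. The code builds a regions × time map, the kind of spatiotemporal map described above. It then measures how much of each region’s signal power falls in a plausible heart-rate band and whether the regions agree on the dominant frequency.

```python
import numpy as np

# Assumed heart-rate band, roughly 42 to 240 beats per minute.
HEART_BAND_HZ = (0.7, 4.0)


def ppg_map(frames, regions):
    """Build a (regions x time) map of mean green-channel intensity.

    frames:  float array of shape (T, H, W, 3) holding RGB video frames.
    regions: list of (top, bottom, left, right) pixel boxes on the face.
    Each row is mean-centred so only the change over time remains.
    """
    rows = [frames[:, top:bottom, left:right, 1].mean(axis=(1, 2))
            for top, bottom, left, right in regions]
    signal_map = np.stack(rows)
    return signal_map - signal_map.mean(axis=1, keepdims=True)


def pulse_evidence(signal_map, fps):
    """Return (mean share of power in the heart-rate band,
    spread in Hz of each region's dominant frequency)."""
    power = np.abs(np.fft.rfft(signal_map, axis=1)) ** 2
    freqs = np.fft.rfftfreq(signal_map.shape[1], d=1.0 / fps)
    power, freqs = power[:, 1:], freqs[1:]  # drop the constant (DC) bin
    in_band = (freqs >= HEART_BAND_HZ[0]) & (freqs <= HEART_BAND_HZ[1])
    share = power[:, in_band].sum(axis=1) / np.maximum(power.sum(axis=1), 1e-12)
    dominant = freqs[np.argmax(power, axis=1)]
    return float(share.mean()), float(dominant.std())


def synthetic_clip(pulse_hz, seconds=10, fps=30, size=8, seed=0):
    """Noisy grey frames, optionally with a faint periodic green tint."""
    rng = np.random.default_rng(seed)
    t = np.arange(seconds * fps) / fps
    frames = 128.0 + rng.normal(0.0, 1.0, size=(t.size, size, size, 3))
    if pulse_hz is not None:
        frames[..., 1] += 2.0 * np.sin(2 * np.pi * pulse_hz * t)[:, None, None]
    return frames


regions = [(0, 4, 0, 4), (0, 4, 4, 8), (4, 8, 0, 4), (4, 8, 4, 8)]
real = pulse_evidence(ppg_map(synthetic_clip(1.2), regions), fps=30)
fake = pulse_evidence(ppg_map(synthetic_clip(None), regions), fps=30)
print("pulsed clip:", real)
print("noise-only clip:", fake)
```

On this synthetic data, the pulsed clip concentrates nearly all of its power in the heart-rate band, and every region agrees on 1.2 Hz. The noise-only clip stands in for a face with no physiological signal, and its power is spread across the spectrum. Real footage adds head motion, compression, and lighting changes, which is presumably why production detectors rely on learned classifiers rather than a fixed spectral test like this one.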

The correct answer is B, by manipulating public opinion and sowing confusion.