- Microsoft CEO Satya Nadella stressed the urgency of acting quickly after fake images of pop star Taylor Swift emerged online.

- AI image generators are easily manipulated, and a 404 Media report highlights the inherent challenges of enforcing restrictions on them.

- Concerns about the proliferation of AI tools that help create fake photos highlight the need for comprehensive solutions to new challenges in the digital realm.

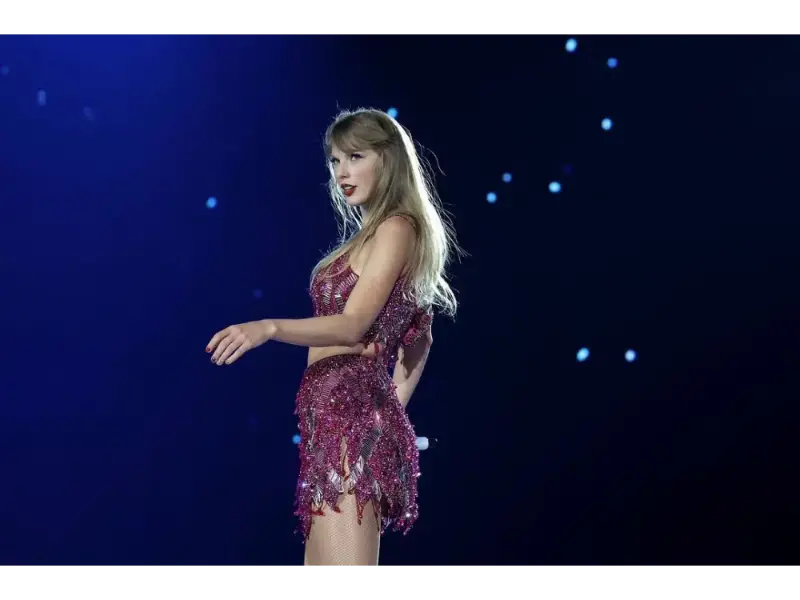

The debate about AI and ‘reality infringements’ has reached one of the world’s most famous celebrities: Taylor Swift, who was recently the subject of deepfake imagery shared online. The incident has brought home one of the most discussed fears around generative AI: are the creators and companies involved doing enough to safeguard against its misuse?

An honest response

Microsoft CEO Satya Nadella has responded to concerns about the emergence of explicit AI images of Taylor Swift. In an interview with NBC Nightly News, Nadella called the surge in simulated nudity without consent “shocking and scary,” underscoring the urgency to act quickly.

In the interview, scheduled to air next Tuesday, Nadella acknowledged the gravity of the situation when interviewer Lester Holt pointed out that the Internet is awash with fake pornographic depictions of Taylor Swift. Nadella’s answer touched on the complexity of tech policy issues, emphasizing the need for strong safeguards to prevent such incidents.

I would say two things: One, is again I go back to what I think’s our responsibility, which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced. And there’s a lot to be done and a lot being done there. But it is about global, societal — you know, I’ll say, convergence on certain norms. And we can do — especially when you have law and law enforcement and tech platforms that can come together — I think we can govern a lot more than we think— we give ourselves credit for.

Satya Nadella, Microsoft CEO

Nadella said Microsoft is committed to taking the steps needed to make content creation safer. He stressed the importance of establishing global social norms and of bringing law, law enforcement, and technology platforms together to police online content effectively.

Microsoft’s own tools may have played a part in creating the fake Swift images. A report by 404 Media suggests that the images came from a Telegram-based community that creates non-consensual pornography. This community is said to have recommended using Microsoft’s Designer image generator, even though Designer is supposed to refuse to generate images of public figures. However, given the ease with which AI generators can be manipulated, the report highlights the inherent challenges of enforcing such restrictions.

While these developments highlight technical flaws that Microsoft may need to address, they also reflect broader concerns about the proliferation of artificial intelligence tools that help create fake nude photos. This trend has sparked heated debate and highlighted the need for comprehensive solutions to address new challenges in the digital realm.

Small community, big power

Some have suggested that Microsoft may be connected to the fake Swift images. 404 Media reports that the images came from a Telegram-based community that produces non-consensual porn and recommends using the Microsoft Designer image generator. In theory, Designer refuses to generate images of celebrities, but AI generators are easy to fool, and 404 Media found that its rules can be broken by tweaking the prompts. While this doesn’t prove that Designer was used to make the Swift images, it is the kind of technical flaw that Microsoft can fix. But AI tools have greatly simplified the process of creating fake nudes of real people, creating chaos for women who are far less powerful and famous than Swift. Controlling their production is not as simple as getting big companies to reinforce guardrails. Even if major Big Tech platforms like Microsoft’s are locked down, people can retrain open tools like Stable Diffusion to make NSFW pictures, despite attempts to make this more difficult. Far fewer users may have access to these generators, but the Swift incident shows how widely the work of a small community can spread.

Nadella is vaguely proposing larger social and political changes, yet despite some initial moves to regulate AI, Microsoft doesn’t have a clear solution. Lawmakers and law enforcement are struggling with how to deal with non-consensual sexual imagery, and AI fakes add a further complication. Some lawmakers are trying to amend right-of-publicity laws to address the problem, but the proposed solutions often pose serious risks to speech. The White House has called for “legislative action” on the issue, but even it has provided precious little detail on what that means.

There are other stopgap measures, though, such as social networks limiting the reach of non-consensual images, or, apparently, Swift’s fans imposing vigilante justice on those who distribute them. For now, Nadella’s only clear plan is to put Microsoft’s own AI house in order.