- An interview with Steve Jobs hit the airwaves recently, or at least, a reincarnation of his voice did, showing how AI dubbing has advanced.

- AI dubbing can reduce risk and cost and improve productivity for companies, but it also raises ethical and regulatory controversies and challenges.

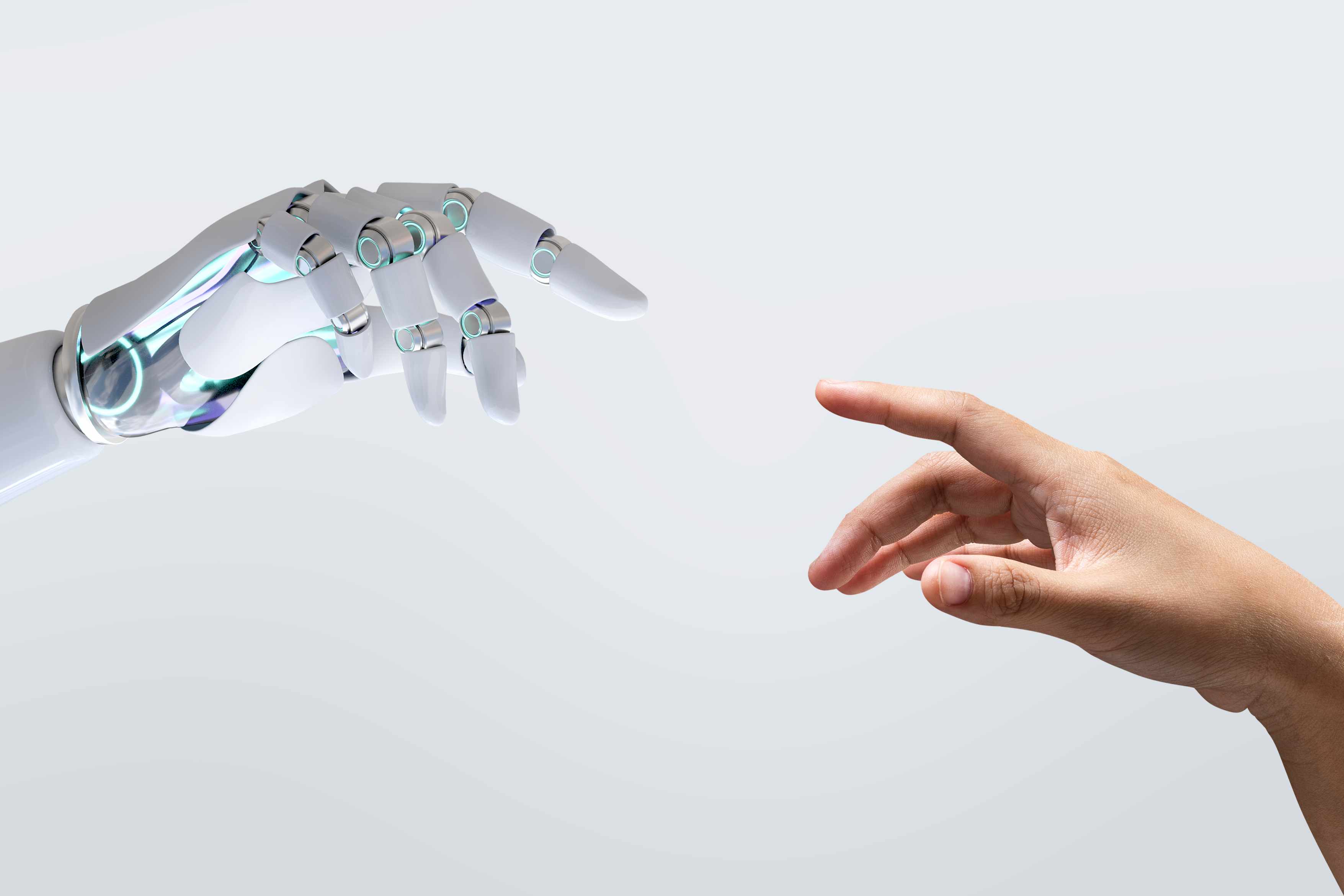

- The future of dubbing needs to balance the technology with the essence of human performance.

The intersection of AI and traditional practices in the entertainment industry has sparked debates about the future of dubbing. Concerns persist about the buyout of voice actors’ voices and about AI’s ability to convey human tone and emotion.

AI dubbing has entered the market

Around the world, it’s undeniable that AI dubbing has started to make waves in the market, with many companies even turning a decent profit. Some of the top providers in the industry include PlayHT, Captions and Rask AI.

PlayHT

This year, the first episode of a podcast called Podcast.ai featured the host talking with Steve Jobs, the co-founder of Apple, about his college days, his views on computers, his work status and his beliefs, among other things.

The podcast, which brings Jobs back from the dead, closely reproduces Jobs’ voice and intonation, according to PlayHT, which is working on voice cloning technology that will enable individuals and businesses to create audio content at scale. On November 23, the company released a voice AI model called On-Premise. It claims the model offers the lowest latency of any speech generation tool available today, along with high security and unlimited availability.

Mahmoud Felfel, founder of PlayHT, said: “We built PlayHT as a platform for generative speech and voice cloning. We started by building the most sophisticated speech editor to help our customers have full control on their generated voices. We then invested into building the first Large Language Model for Speech Synthesis and Voice Cloning and achieved SOTA results in voice quality and expressiveness.”

Captions

Captions, a NYC-based video startup, offers services like text captioning, editing, and special effects for social media content creators. It expanded into translation services in 2022 and introduced AI Dubbing in 2023. With innovative features like AI Eye Contact and automatic AI-generated subtitles, Captions has over 100,000 daily users and five million creators. Despite high AI training costs, the company is profitable and has raised $40 million in funding. Its latest innovation, Lipdub, has been adopted by major names such as ESPN and Twitch co-founder Justin Kan.

Rask AI

Rask AI, an AI-powered video and audio localization tool, translates content into over 130 languages and offers a voice cloning feature. Launched on March 20th, 2023, and winning Product of the Day on Product Hunt in early April, it now has over 750,000 users worldwide. Key projects include the dubbing of the French film ‘THE LEGEND OF AKAM’ into Portuguese for release in Brazil. In addition, PodcastOne is using Rask AI to translate its library of podcasts into Spanish, starting with Barbara Schroeder’s debut podcast ‘Bad Bad Thing’.

Through processes such as translation, cultural adaptation, voiceover or dubbing, Rask AI can greatly simplify the process of localising video content, helping companies and creators to produce localized videos efficiently and cost-effectively.


Currently utilised technology

Significant progress has been made in applying AI to dubbing, and the work currently centres on two main technologies. Almost all companies’ AI voice models build on research and development in these two areas.

The first is Voice Conversion (VC) technology, which enables AI to transform existing speech by adjusting timbre, pitch, language and other attributes whilst maintaining the original content. However, it cannot accommodate multi-person interactions or emotional expression. This technology is similar to reading aloud and is appropriate for scenarios that require only a change in voice characteristics whilst retaining the original content.

The second is Text to Speech (TTS) technology, which is capable of converting written text into interactive speech. In recent years, TTS technology has become able to convey emotional expression, making AI dubbing sound more “human” and less coldly mechanical.

Implications for traditional voice-over practices

1. Improve efficiency and reduce costs

“By leveraging Rask AI, businesses can accelerate their localisation efforts, reach a wider audience, and enhance their brand recognition in global markets.”

Maria Chmir, Rask AI CEO & founder

The integration of AI in dubbing enhances work efficiency and reduces production costs by quickly analysing voiceprints for accurate and contextually appropriate lines. This allows for faster dubbing and the creation of multiple language versions, leading to a paradigm shift in production companies’ approach to dubbing projects.

Rikki Lee Travolta, a talented actor, said: “The biggest advantage of AI in voiceover is cost. A union voiceover actor is going to cost an hourly rate. Add on the cost of engineers and studio rental on top of that. With AI, you eliminate most or all of those costs.”

Maria Chmir, Rask AI CEO & founder, also says AI is a handy tool for content and businesses expanding overseas. “By leveraging Rask AI, businesses can accelerate their localisation efforts, reach a wider audience, and enhance their brand recognition in global markets.”

2. Risk reduction

AI dubbing can mitigate risks for large enterprises, as demonstrated by Mihoyo’s game “Tears of Themis”. When a voice actor was involved in a dispute, Mihoyo used deep synthesis to learn and replicate the actor’s voice from previous recordings, allowing for automatic dubbing. This solution preserved the gaming experience without needing to replace the actor or leave the character voiceless.

3. Promoting overseas dissemination

The lack of synchronization between lip movements and voice in dubbed content is a major drawback, potentially contributing to its unpopularity in English-speaking countries. AI can be used to modify a character’s lip movements, making localized content more authentic and appealing to viewers. Chmir said in the interview: “By leveraging Rask AI, businesses can accelerate their localisation efforts, reach a wider audience and enhance their brand recognition in global markets.”

4. Panic over AI replacing humans

“Now we are just as scared as we were when COVID-19 came; we don’t know what’s going to happen.”

Daniel Hamvas, voice actor

The application of artificial intelligence in voiceover is not limited to a single industry: companies across sectors are exploring the possibility of using AI to synthesise lines. Traditionally, film, TV and game companies select suitable voice actors months in advance, provide the text and record offline. Established voice actors are paid based on the number of words and the time spent recording. However, the advent of artificial intelligence has brought about a new dynamic. Some companies record voice actors’ voices and then use AI to synthesise additional lines, while others even try to buy out voice actors’ voices in a one-off deal to create a voice IP that belongs solely to the company.

This raises questions about the future of the voiceover industry. Renowned voice actor Daniel Hamvas has voiced many characters in Hungarian dubbed content over the years and is now the leader of the Hungarian dubbing workers’ union. He is at the forefront of the battle, fiercely opposing the use of AI dubbing to protect professionals whose livelihoods are threatened by automation. Hamvas voiced those concerns, saying, “Now we are just as scared as we were when COVID-19 came; we don’t know what’s going to happen.”

Challenges and controversies

“No number of algorithms can create the imperfections that make a human performance perfect. AI can do a good imitation, but an Elvis impersonator will never be Elvis.”

Rikki Lee Travolta, a talented actor

Despite its advances, AI may struggle to capture the depth and authenticity that human actors bring to their performances. The risk of dubbing losing the human touch raises concerns about audience engagement and the overall viewing experience.

Phil Siegel, founder of AI nonprofit organization CAPTRS, said: “The models are able to identify the voice’s characteristic tones; it can do it with very little data but if you fed it several sentences of a voice it would probably be able to produce a voice most people couldn’t distinguish from the real person.”

Travolta also emphasises that AI cannot ultimately replace humans. “And I expect that AI will continue to advance. But it will never be human. No number of algorithms can create the imperfections that make a human performance perfect. AI can do a good imitation, but an Elvis impersonator will never be Elvis,” Travolta said.

Sound copyright protection

“The most important regulation, besides the legal and ethical issues above, is that there is building consensus that AI-generated content needs to be identified as such: both what tools were used and what ‘feedstock’ inputs.”

Phil Siegel, founder of AI nonprofit organization CAPTRS

How AI dubbing addresses potential ethical and legal issues in voice modelling remains a puzzle. Most companies currently ensure the compliance and security of voice capture. Machines can only reproduce a voice from text that the person has read aloud themselves, which also requires that person’s authorization. Siegel also highlights the importance of watermarking: “The most important regulation, besides the legal and ethical issues above, is that there is building consensus that AI-generated content needs to be identified as such: both what tools were used and what ‘feedstock’ inputs. So dubbing Taylor Swift’s voice with Speechify would have a specific identifier watermark.”

Unfortunately, there is still a gap in legal protection in the area of AI voice copyright, and how to define voice infringement is likewise very vague. Some voice practitioners are now aware of the value of their voices, but are ordinary people who use such software aware of the risks behind voice licensing?

“Because most of the current legislation is actually based on irrational fear, and we’re an industry that needs to regulate ourselves first and foremost.”

Maria Chmir, Rask AI CEO & founder

As a developer of AI dubbing products, Chmir also set out the company’s position: “We are committed to working with media companies, governments, and AI research institutions to raise awareness and establish ethical standards around the authenticity of content in AI. So we’re at the very beginning, and it’s really important to be open about what’s going on. Because most of the current legislation is actually based on irrational fear, and we’re an industry that needs to regulate ourselves first and foremost. Our products make AI technology accessible to creators whilst limiting the potential for irresponsible use.”

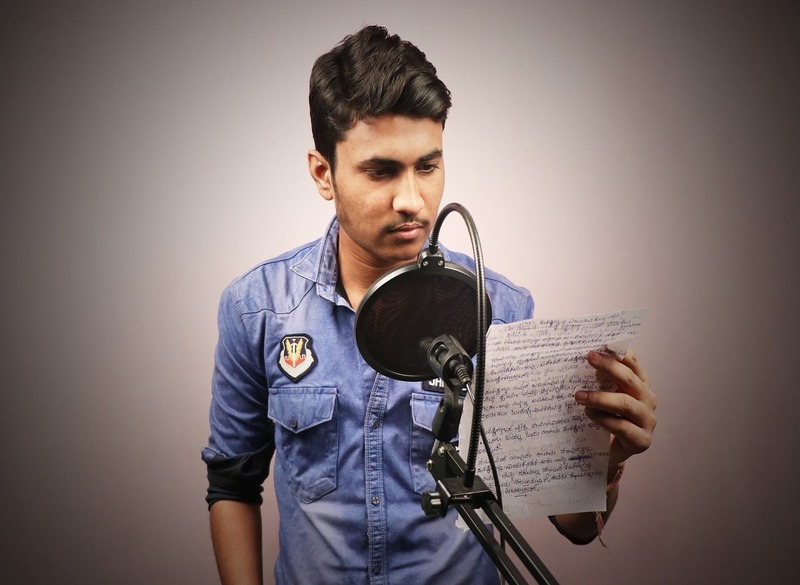

Human factors in dubbing

Voiceover is more than just a technical process. It’s an art form that relies on an actor’s ability to effectively convey emotion and nuance. Human actors bring a unique depth of experience and cultural understanding to their performances, allowing them to adapt to the nuances of different characters and scenes. While AI can mimic human speech patterns, the question remains whether it can truly replicate the emotional depth and connection that a human actor establishes with an audience.

The future of voiceover: finding the balance

As the industry integrates AI into voiceover practices, finding a balance between technological innovation and preserving the essence of human performance becomes critical. Collaboration between AI and human voice actors may provide a middle ground where the efficiency of AI can complement the nuanced performances of human actors. This hybrid approach would not only speed up the dubbing process, but also ensure that emotional resonance and cultural differences are not sacrificed in the pursuit of efficiency.

“Certain experts believe AI dubbing could replace everyone in the industry, although it’s far from the reality. It’s more accurate to call the current stage as co-creation,” Chmir added.