- Ego-Exo4D, developed by Meta and partners, is a pioneering dataset integrating first-person and external camera views for AI research.

- The dataset includes over 1,400 hours of video from more than 800 skilled participants, covering a diverse range of human skills.

- It’s designed to advance AI in video learning, augmented reality, and robot learning, with a public benchmark challenge planned for 2024.

Meta’s Fundamental Artificial Intelligence Research (FAIR) team, along with Project Aria and 15 university partners, has launched Ego-Exo4D. The dataset and benchmark suite is intended to advance AI’s understanding of human skills through video learning and multimodal perception.

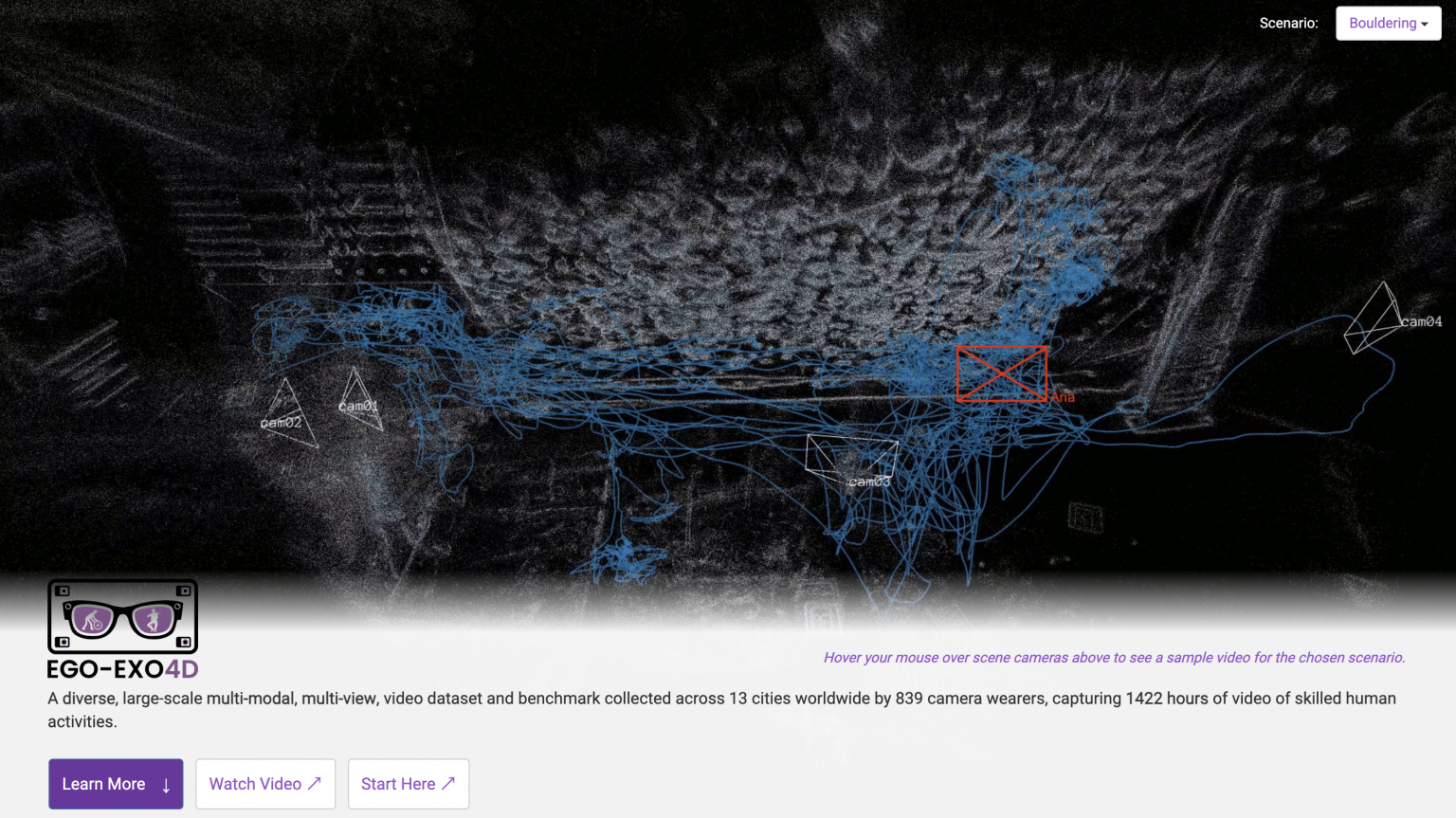

Ego-Exo4D uniquely integrates first-person “egocentric” views from wearable cameras with multiple “exocentric” views from surrounding cameras. This combination provides a holistic understanding of both the participant’s perspective and their surrounding environment.

Global collaboration and data collection

The development of Ego-Exo4D involved more than 800 skilled participants from six countries, who contributed over 1,400 hours of video. The dataset, set to be open-sourced in December, includes annotations for novel benchmark tasks and is described in detail in a technical paper.

Ego-Exo4D is focused on skilled activities like sports, music, cooking, and more. Its applications range from augmented reality systems and robot learning to social networks, where it can enhance skill learning and understanding.

Dataset characteristics and resources

As the largest public dataset of synchronized first- and third-person videos, Ego-Exo4D features diverse experts such as athletes, dancers, and chefs. It is multimodal as well as multiview: recordings made with Meta’s Aria glasses include audio, inertial measurements, and wide-angle camera captures.

The dataset includes rich video-language resources like narrations, descriptions, and expert commentary. These resources are time-stamped against the video, providing AI models with detailed insights into skilled human activities.

Meta proposes four foundational tasks for ego-exo video research and provides extensive annotations, the result of over 200,000 hours of annotator effort. A public benchmark challenge is planned for 2024 to foster research in this emerging field.

The Ego-Exo4D consortium is a global collaboration that brings together AI researchers from a range of institutions and geographical contexts. The project also marks a significant deployment of Aria glasses in the academic research community.

The introduction of Meta’s Ego-Exo4D dataset represents a significant stride in the field of AI and machine learning. By integrating first-person and third-person perspectives in a comprehensive video dataset, it opens up new avenues for understanding and interpreting human skills and behaviors. This innovation not only broadens the scope of research in AI but also promises practical applications in augmented reality, robotics, and beyond. The collaborative effort, involving a diverse range of skilled participants and a variety of real-world scenarios, ensures a rich and varied dataset that could lead to more nuanced and context-aware AI systems. This development is a testament to the progressive strides being made in technology, offering exciting prospects for future research and real-world applications.

With Ego-Exo4D, Meta and its partners aim to accelerate research in AI video learning. The potential applications are vast, ranging from augmented reality learning experiences to robots learning from human expertise. Ego-Exo4D is a significant step toward this future, sparking excitement in the research community for the possibilities it opens.