- MLOps focuses on improving collaboration between data scientists, ML engineers, and IT operations teams to ensure that machine learning models are developed, deployed, and maintained efficiently and effectively.

- As machine learning continues to evolve, MLOps provides essential tools and practices for managing complex ML workflows, ensuring that models deliver value and meet business needs effectively.

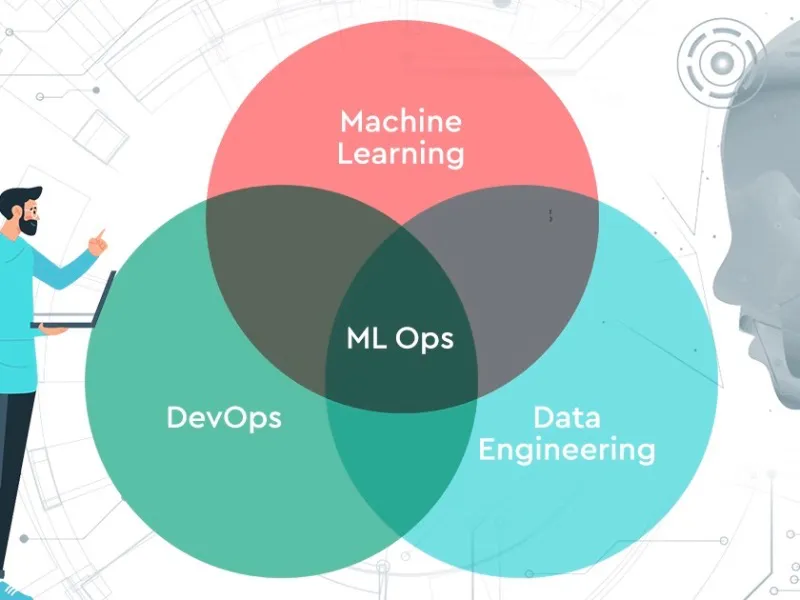

MLOps, short for Machine Learning Operations, is a set of practices and tools designed to manage and streamline the lifecycle of machine learning (ML) models. Similar to DevOps in software engineering, MLOps focuses on improving collaboration between data scientists, ML engineers, and IT operations teams to ensure that machine learning models are developed, deployed, and maintained efficiently and effectively.

What is MLOps?

MLOps is an approach to managing the machine learning lifecycle with a focus on automating and optimising processes from model development through deployment and monitoring. It integrates best practices from DevOps with ML-specific needs, aiming to enhance the reliability, scalability, and performance of machine learning systems.

Model development and experimentation

MLOps facilitates efficient development and experimentation by providing tools and frameworks that support versioning, reproducibility, and collaboration. This involves managing datasets, tracking experiments, and ensuring that model development processes are streamlined. Data science teams at a company like Uber use MLOps platforms to manage experiments, track changes in models and datasets, and collaborate on developing new algorithms for ride-sharing optimisation. Efficient model development ensures that data scientists can experiment and iterate quickly, leading to more effective and innovative machine learning solutions.
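To make versioning and experiment tracking concrete, here is a minimal sketch in Python. It is not how any particular MLOps platform works: `fingerprint`, `ExperimentRun`, and `ExperimentLog` are hypothetical names invented for this illustration, and the in-memory dictionary stands in for whatever tracking store a team actually uses. The idea is simply that each run records which dataset version and parameters produced which metrics, so results can be reproduced and compared.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


def fingerprint(payload: bytes) -> str:
    """Return a short content hash that identifies a dataset version."""
    return hashlib.sha256(payload).hexdigest()[:12]


@dataclass
class ExperimentRun:
    """One tracked experiment: which data, which settings, which results."""
    dataset_version: str
    params: dict
    metrics: dict = field(default_factory=dict)

    def run_id(self) -> str:
        # The ID depends only on the inputs (data version + params), so
        # repeating the same experiment yields the same ID.
        key = json.dumps(
            {"data": self.dataset_version, "params": self.params},
            sort_keys=True,
        )
        return fingerprint(key.encode("utf-8"))


class ExperimentLog:
    """In-memory stand-in for an experiment tracking store (an assumption)."""

    def __init__(self):
        self.runs = {}

    def record(self, run: ExperimentRun) -> str:
        rid = run.run_id()
        self.runs[rid] = asdict(run)
        return rid

    def best(self, metric: str) -> str:
        """Return the ID of the run with the highest value for `metric`."""
        return max(self.runs, key=lambda rid: self.runs[rid]["metrics"][metric])
```

A team could record two runs on the same dataset version with different learning rates, then call `best("accuracy")` to find which configuration to carry forward.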

Continuous integration and continuous delivery (CI/CD) for ML

MLOps incorporates CI/CD practices tailored for machine learning, including the automation of model training, validation, and deployment. This helps maintain a consistent, automated pipeline for releasing machine learning models. A tech giant like Google uses CI/CD pipelines to automate the process of training and deploying models across various services, such as Google Search and Google Ads, ensuring that new models are integrated smoothly into production environments. Automated CI/CD pipelines for ML streamline deployment, reduce manual errors, and keep production models up to date.
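The train, validate, deploy sequence can be sketched as a small promotion gate. This is an illustrative example only, not a description of any company's pipeline: `promotion_gate`, `run_pipeline`, and the default thresholds are assumptions made for the sketch, and accuracy stands in for whatever validation metric a team chooses.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    promote: bool
    reason: str


def promotion_gate(candidate_accuracy: float,
                   production_accuracy: float,
                   min_accuracy: float = 0.80,      # hypothetical quality floor
                   min_improvement: float = 0.0     # hypothetical required margin
                   ) -> GateResult:
    """Decide whether a newly trained model may replace the production model."""
    if candidate_accuracy < min_accuracy:
        return GateResult(False, "below minimum accuracy")
    if candidate_accuracy < production_accuracy + min_improvement:
        return GateResult(False, "does not beat production by the required margin")
    return GateResult(True, "passed validation")


def run_pipeline(train, evaluate, deploy, production_accuracy: float) -> GateResult:
    """Train a model, validate it, and deploy only if the gate passes.

    `train`, `evaluate`, and `deploy` are caller-supplied callables.
    """
    model = train()
    result = promotion_gate(evaluate(model), production_accuracy)
    if result.promote:
        deploy(model)
    return result
```

Because deployment happens only inside the gate, a model that regresses on validation never reaches production without someone deliberately changing the thresholds.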

Model monitoring and management

MLOps involves continuous monitoring of machine learning models in production to track performance, detect drift, and manage updates. This includes monitoring metrics such as accuracy, latency, and resource utilisation. Netflix uses MLOps tools to monitor the performance of recommendation algorithms in real time. By tracking model performance and user engagement, Netflix can identify and address issues promptly, ensuring that recommendations remain relevant and effective. Ongoing monitoring helps maintain model performance and reliability, ensuring that models continue to meet business objectives and adapt to changing data patterns.
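Drift detection can take many forms. One common option, chosen here purely for illustration, is the Population Stability Index (PSI), which compares the distribution of a feature in live traffic against a reference sample from training time. The function names, the bin count, and the 0.2 alert threshold below are assumptions for the sketch; the threshold is a widely quoted rule of thumb, not a universal standard.

```python
import math


def psi(reference, live, bins=10, eps=1e-6):
    """Population Stability Index between a reference and a live sample.

    Bins are equal-width over the reference range; live values outside
    that range are counted in the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    live_p = proportions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_p, live_p))


def drift_alert(reference, live, threshold=0.2):
    """Return True when the PSI exceeds the (assumed) alert threshold."""
    return psi(reference, live) > threshold
```

In practice a check like this would run on a schedule for each monitored feature, alongside the accuracy and latency metrics mentioned above.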

Scalability and infrastructure management

MLOps supports scalable infrastructure management by automating resource provisioning, managing compute resources, and optimising performance. This involves integrating with cloud platforms and managing infrastructure efficiently. A financial services firm like JPMorgan Chase leverages MLOps to manage the deployment of machine learning models across cloud environments. This ensures that models can scale to handle large volumes of financial transactions and market data. Scalable infrastructure management ensures that machine learning models can handle varying workloads and demands, providing reliable performance even as data and usage grow.
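As a rough illustration of automated resource provisioning, the sketch below computes how many model-serving replicas a workload needs. It is a simplified assumption-laden example, not any cloud provider's autoscaling algorithm: `desired_replicas`, the 20% headroom, and the replica bounds are all hypothetical.

```python
import math


def desired_replicas(requests_per_second: float,
                     capacity_per_replica: float,
                     min_replicas: int = 1,
                     max_replicas: int = 20,
                     headroom: float = 0.2) -> int:
    """Return a replica count that covers the load plus spare headroom.

    The result is clamped to [min_replicas, max_replicas] so the service
    never scales to zero and never exceeds its budget.
    """
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_second * (1 + headroom) / capacity_per_replica)
    return max(min_replicas, min(max_replicas, needed))
```

The lower bound keeps the model available during quiet periods, while the upper bound caps cost when traffic spikes.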

Compliance and governance

MLOps includes practices for ensuring that machine learning models comply with regulatory requirements and organisational policies. This involves managing data privacy, security, and model interpretability. In the healthcare sector, organisations like Mayo Clinic use MLOps to ensure that machine learning models used for patient diagnostics comply with HIPAA regulations and maintain data security and privacy. Compliance and governance practices help organisations adhere to legal and ethical standards, ensuring that machine learning models are used responsibly and securely.
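One simple way to enforce governance in a pipeline is to block a release until the model's metadata is complete. The sketch below is illustrative only: the field names in `REQUIRED_FIELDS` are a hypothetical organisational policy, and passing this check does not by itself establish compliance with HIPAA or any other regulation.

```python
# Hypothetical policy: metadata every model must carry before release.
REQUIRED_FIELDS = ("owner", "training_data_source", "intended_use", "approved_by")


def missing_governance_fields(model_record: dict, required=REQUIRED_FIELDS) -> list:
    """Return the required fields that are absent or empty, in policy order."""
    return [name for name in required if not model_record.get(name)]


def can_release(model_record: dict) -> bool:
    """A model may be released only when no required field is missing."""
    return not missing_governance_fields(model_record)
```

A deployment step could call `can_release` and refuse to proceed, reporting the output of `missing_governance_fields` so the owning team knows what to complete.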

Real-world applications of MLOps

Companies like Amazon use MLOps to optimise product recommendations, manage inventory predictions, and enhance customer experiences. Automated pipelines and monitoring ensure that these models are continuously updated and perform well in production.

Financial institutions such as Goldman Sachs apply MLOps to manage credit scoring models, detect fraud, and analyse market trends. MLOps practices help them deploy models that handle large datasets and adapt to changing financial conditions.

Organisations like Pfizer use MLOps to manage predictive models for drug discovery, patient diagnostics, and treatment recommendations. Continuous monitoring and compliance ensure that models are effective and adhere to regulatory standards.

Companies like Lyft implement MLOps to manage models for route optimisation, demand forecasting, and autonomous vehicle systems. MLOps practices ensure that these models are scalable and perform reliably in real-world scenarios.

MLOps is a critical discipline that integrates machine learning with operational best practices to streamline the model lifecycle. By focusing on model development, CI/CD for ML, monitoring, scalability, and compliance, MLOps enhances the efficiency, reliability, and performance of machine learning systems. As machine learning continues to evolve, MLOps provides essential tools and practices for managing complex ML workflows, ensuring that models deliver value and meet business needs.