- Although former Pakistani Prime Minister Imran Khan is incarcerated, his political party used AI voice cloning to stage an unusual virtual rally, sparking controversy.

- The use of AI-generated voices raises questions about trust in political information, the potential for misinformation, and the effect on public perception of political events.

- The government's shutdown of social media platforms to restrict access to the rally highlights the delicate balance between controlling the flow of information and protecting citizens' freedom of speech.

AI echoes Imran Khan: Virtual rally triumphs amidst social media clampdown

Former Pakistani Prime Minister Imran Khan, who has been incarcerated since August over the alleged illegal sale of state gifts, has found an unusual way to continue his political campaign. Despite his physical absence, Khan's party recently released a four-minute video that uses AI voice cloning to replicate his voice. The video, presented during a virtual rally in Pakistan, paired the synthesized audio with a caption reading, "AI voice of Imran Khan based on his notes."

According to reports, Khan provided the Pakistan Tehreek-e-Insaf (PTI) party with a shorthand script, which a legal team then refined to capture his distinctive rhetorical style. The finished script was converted into audio using software from ElevenLabs, an AI company specializing in text-to-speech and voice generation tools.

Jibran Ilyas, a social media lead for Khan's party, shared the video on X (formerly Twitter). According to The Guardian, the video combined archival footage from Khan's past rallies and stock images with audio from ElevenLabs' synthetic voice engine.

The five-hour virtual rally drew more than 500,000 views on YouTube and thousands more viewers across other social media platforms. To restrict access to the event, the Pakistani government temporarily shut down Facebook, Instagram, X, and YouTube on the day of the rally. According to the internet monitoring group NetBlocks, the restrictions caused a nationwide disruption of these platforms in Pakistan on Sunday evening.

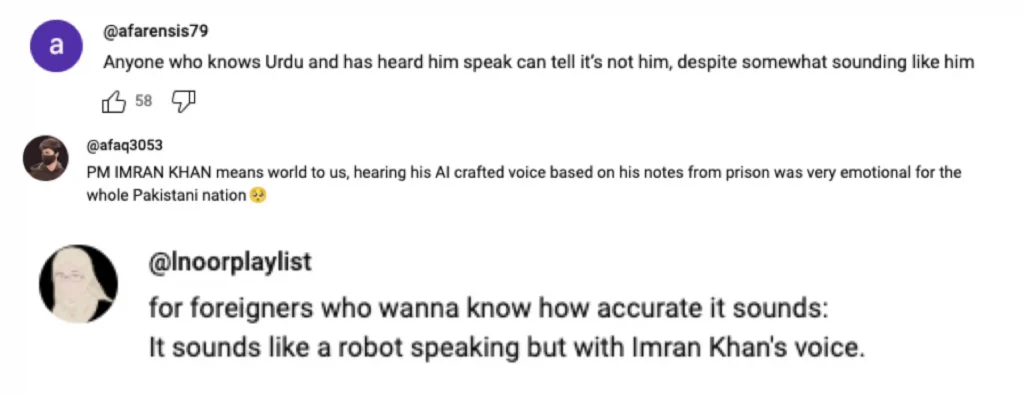

Despite the controversy over using AI to simulate Khan's voice, supporters praised the video on social media. The approach allowed the party to engage its base and work around Khan's absence from traditional rallies.

AI in politics: Imran Khan’s virtual rally raises ethical questions on trust, misinformation, and freedom of speech

The use of AI to mimic Imran Khan's voice in a political broadcast has sparked heated debate over its political, technological, and social ethics. While Khan's supporters applaud the approach, a critical question emerges: does AI's entry into political communication blur the line between truth and falsehood, eroding the trust voters place in political information?

This use of AI-generated voices raises pointed questions about the authenticity of political information. Supporters may welcome the innovation, but the broader public may come to doubt the reliability of political messages, and unchecked skepticism of this kind could undermine a healthy political environment.

Deploying AI in political contexts carries further risks. Foremost among them is the manipulation of public perception: used strategically, AI-generated content can distort the image of political figures or events, sway public opinion with false or exaggerated information, and jeopardize the democratic process.

There is also a risk of deepening political divides. AI used to spread disinformation can further polarize society, making constructive dialogue and compromise harder to achieve and threatening the cohesion a well-functioning democracy depends on.

Erosion of trust in the media is another danger. As AI becomes better at mimicking human voices and images, audiences may grow skeptical even of traditional media sources, and that skepticism can be exploited to advance personal agendas.

There are security risks as well. Unregulated AI-generated content, such as deepfake videos or cloned voices, could inflame geopolitical tensions and lead to misunderstandings or even conflicts between nations. This underscores the need for comprehensive regulation at both the national and international levels.

Moreover, the unchecked use of AI in political communication threatens core democratic values such as transparency and accountability. If voters cannot distinguish genuine information from manipulated information, holding political leaders accountable becomes difficult, weakening the foundations of democracy.

The case of Imran Khan's cloned voice points to the need for rules governing the ethical use of AI in politics. Such rules must be drafted carefully so that they do not curtail freedom of speech or political participation, while still guarding against manipulation, deepening political divides, eroding trust, security threats, and the weakening of democratic values.

In conclusion, the implications of using AI in political communication extend well beyond authenticity and misinformation. The risks include broader threats to democracy, public trust, and global security, calling for a comprehensive approach to regulating and ethically managing the use of AI in politics.