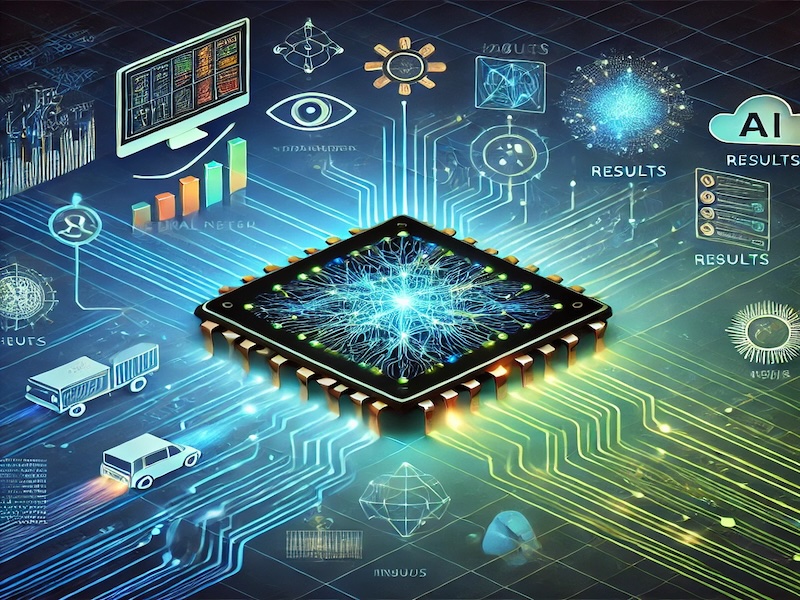

- AI chips are specialized processors designed to accelerate and optimize artificial intelligence tasks, such as machine learning and deep learning, by enabling faster, more efficient processing of large data sets and complex computations.

- Key applications of AI chips include autonomous vehicles, data centers, edge computing, healthcare, and smart cities, with future trends pointing toward smarter chips, integration with quantum computing, and increased use in mobile and IoT devices.

In the world of artificial intelligence (AI), speed, efficiency, and power are crucial factors for success. From self-driving cars to natural language processing models like ChatGPT, AI applications are growing more sophisticated every day. Central to this advancement is a special class of processors known as AI chips. These chips are designed to accelerate and optimize the performance of AI tasks, which can often be extremely computationally intensive. But what exactly are AI chips, and how do they contribute to the rapidly expanding world of AI?

What is an AI chip?

An AI chip is a specialized piece of hardware designed to execute machine learning and deep learning tasks quickly and with low latency. Unlike traditional central processing units (CPUs), which are designed for general computing tasks, AI chips are built to handle the specific needs of artificial intelligence algorithms, such as training neural networks, processing large amounts of data, and making predictions.

These chips are capable of performing complex computations much faster and more efficiently than general-purpose processors, making them an essential component in modern AI systems. AI chips commonly appear in data centers, edge devices, autonomous vehicles, and robotics, where real-time processing of large data sets is critical.

How do AI chips work?

At the core of AI chip technology is parallel processing. Traditional CPUs have a small number of cores optimized for executing instructions largely in sequence, while AI chips are built to run many operations simultaneously. This makes AI chips ideal for the large-scale, data-intensive operations that are common in AI.
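
To make the idea of parallel processing concrete, here is a minimal Python sketch, not a description of how any particular chip is built. It multiplies two small matrices twice: once computing every output element in order, and once handing each output row to a separate worker. The function names and sample matrices are assumptions made for illustration. Python threads will not actually run this faster; the point is only that each output row can be computed independently, which is the property AI chips exploit in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, col):
    """Multiply-accumulate over one row/column pair."""
    return sum(a * b for a, b in zip(row, col))


def matmul_sequential(a, b):
    """Compute every output element one after another."""
    cols = list(zip(*b))  # columns of b
    return [[dot(row, col) for col in cols] for row in a]


def matmul_parallel(a, b, workers=4):
    """Hand each output row to a worker; no row depends on another."""
    cols = list(zip(*b))

    def one_row(row):
        return [dot(row, col) for col in cols]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(one_row, a))


# Hypothetical sample data: a 3x2 matrix times a 2x3 matrix.
a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8, 9], [10, 11, 12]]

sequential_result = matmul_sequential(a, b)
parallel_result = matmul_parallel(a, b)
print(parallel_result == sequential_result)  # both orders give the same answer
```

Because the rows share no intermediate state, the work could be split across thousands of hardware units just as easily as across four threads.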

Types of AI chips

There are several types of AI chips, each designed to meet different needs within the AI ecosystem. The most common types include:

- Graphics Processing Units (GPUs): GPUs are among the best-known types of AI chips. Initially developed for rendering graphics in video games, GPUs turned out to be well suited to machine learning and deep learning tasks. They excel at performing matrix multiplications and handling parallel tasks, which are central to neural network processing.

- Tensor Processing Units (TPUs): Developed by Google, TPUs are custom-designed chips optimized for the matrix operations used in deep learning. TPUs are designed specifically for AI workloads, offering both high computational power and energy efficiency. Google uses TPUs extensively in its data centers to power services like Google Translate and Google Photos.

- Application-Specific Integrated Circuits (ASICs): ASICs are chips designed for a specific application, such as AI tasks; Google's TPUs are one well-known example. These chips can outperform general-purpose processors in terms of both speed and efficiency, but they lack the versatility of GPUs and CPUs. They are particularly useful for high-performance, low-latency applications, such as those in autonomous vehicles or robotics.

- Field-Programmable Gate Arrays (FPGAs): FPGAs are reconfigurable chips that can be programmed to perform specific tasks. Unlike ASICs, which are hardwired for a specific function, FPGAs can be programmed and reprogrammed as needed. This flexibility makes them ideal for some AI applications, especially when it’s necessary to change the chip’s functionality quickly.
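
The matrix operations mentioned above are what a neural network layer boils down to. The following is a small illustrative sketch in plain Python, assuming a single fully connected layer with a ReLU activation; the function name `dense_layer` and the sample weights are hypothetical. It also counts the multiply-accumulate operations, the basic unit of work that GPUs, TPUs, and other AI chips are built to run in bulk.

```python
def dense_layer(inputs, weights, biases):
    """Return ReLU(W x + b) and the number of multiply-accumulates used."""
    outputs = []
    macs = 0
    for w_row, bias in zip(weights, biases):
        total = bias
        for w, x in zip(w_row, inputs):
            total += w * x  # one multiply-accumulate
            macs += 1
        outputs.append(max(0.0, total))  # ReLU activation
    return outputs, macs


# Hypothetical layer: 3 inputs, 2 outputs.
inputs = [1.0, 2.0, 3.0]
weights = [[0.5, -1.0, 0.25], [1.0, 1.0, 1.0]]
biases = [0.0, -1.0]

outputs, macs = dense_layer(inputs, weights, biases)
print(outputs, macs)
```

A layer with `n_in` inputs and `n_out` outputs needs `n_in * n_out` multiply-accumulates per input, so the count grows quickly as layers get wider.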

Why are AI chips important?

The importance of AI chips becomes clear when considering the immense computational demands of AI. Machine learning, particularly deep learning, involves processing vast datasets through complex models with millions, or even billions, of parameters. Training these models requires significant computational power, making it both time-consuming and expensive. Traditional processors like CPUs struggle to keep up with these demands due to their sequential processing nature. AI chips, designed for parallel processing, are optimized to handle these intensive workloads efficiently. They can perform massive numbers of calculations simultaneously, dramatically speeding up model training and inference, and making AI advancements more feasible and scalable.

AI chips are designed to meet these needs by providing the following advantages:

- Speed: AI chips can process data at much higher speeds compared to traditional CPUs. This speed is crucial for applications such as real-time decision-making in autonomous systems, video processing, or even gaming.

- Energy Efficiency: AI workloads can consume large amounts of power. AI chips are designed to deliver more computation per watt than general-purpose processors, which lowers power draw and heat output for the same amount of work.

- Parallelism: AI models require many simultaneous computations, something that GPUs and other specialized AI chips excel at. This parallel processing allows AI systems to scale and perform tasks that would otherwise take too long to complete.

- Scalability: AI chips can handle increasingly larger datasets and more complex models. As AI continues to evolve, the need for more powerful and efficient hardware will grow, and AI chips are poised to meet this demand.
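
A rough back-of-the-envelope calculation shows why parallelism matters for speed and scalability. The sketch below is an idealized model, not a benchmark: it assumes a hypothetical small fully connected network and a chip that completes a fixed number of multiply-accumulate operations per step with no memory or scheduling overhead. The layer sizes and lane counts are made up for illustration.

```python
import math


def mlp_macs(layer_sizes):
    """Multiply-accumulates for one forward pass through fully connected layers."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def ideal_steps(total_macs, lanes):
    """Steps needed if `lanes` multiply-accumulates complete per step (no overhead)."""
    return math.ceil(total_macs / lanes)


layer_sizes = [784, 512, 512, 10]  # hypothetical network, chosen for illustration
total = mlp_macs(layer_sizes)

for lanes in (1, 64, 4096):  # hypothetical numbers of parallel units
    print(f"{lanes:>5} lanes -> {ideal_steps(total, lanes)} steps")
```

Real hardware falls short of this ideal because data still has to be moved to and from memory, but the trend holds: the more operations a chip can run at once, the fewer steps each prediction takes.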

With artificial intelligence, we are summoning the demon. You know those stories where there’s the guy with the pentagram and the holy water, and he’s like, ‘Yeah, he’s sure he can control the demon’? Doesn’t work out.

Elon Musk, CEO of Tesla and SpaceX

Applications of AI chips

AI chips have become an integral part of numerous industries. Some of the key areas where AI chips are making a significant impact include:

- Autonomous Vehicles: Autonomous vehicles require real-time processing of large amounts of sensor data (such as from cameras, radar, and LIDAR) to navigate roads safely. AI chips are used to process this data quickly and efficiently, enabling the vehicle to make instant decisions based on its surroundings.

- Data Centers: AI chips are extensively used in data centers, where they help process large datasets for cloud computing, machine learning, and AI applications. Google, Amazon, Microsoft, and other tech giants have invested heavily in AI chip development to meet the growing demand for cloud-based AI services.

- Edge Computing: As AI applications become more distributed, edge devices (such as smartphones, smart cameras, and IoT devices) are increasingly relying on AI chips. These chips enable devices to run AI models locally, reducing the need to send data back to the cloud and lowering latency.

- Healthcare: AI chips are being used in healthcare to power medical imaging systems, diagnostic tools, and drug discovery. They help in analyzing vast amounts of medical data, which can aid in quicker diagnosis and more personalized treatments.

- Smart Cities: AI chips also play a role in the development of smart cities, where they are used to power everything from traffic management systems to smart energy grids, making urban living more efficient and sustainable.

The future of AI chips

As AI continues to evolve, the demand for more powerful and specialized chips will only grow. Here are a few trends we can expect to see in the future of AI chip development:

- Integration of AI Chips with Cloud Infrastructure: Cloud services like AWS, Google Cloud, and Azure are incorporating AI chips into their infrastructure. This will allow businesses and developers to access powerful AI tools without needing to invest in expensive hardware.

- Smarter AI Chips: In the future, AI chips may be able to adjust their own processing power to the task at hand. This would involve building AI into the chips' control logic, allowing for smarter decision-making and resource allocation.

- Quantum Computing and AI Chips: While still in its early stages, quantum computing has the potential to revolutionize AI. Researchers are exploring how quantum computers could complement traditional AI chips to solve problems that are currently beyond the reach of classical computing.

- AI Chips for Edge Devices: As edge computing continues to grow, there will be a greater need for AI chips that are not only powerful but also compact and energy-efficient. This will drive innovation in low-power AI chips for mobile devices and IoT applications.

Challenges in AI chip development

While AI chips hold tremendous potential, their development also comes with challenges. Some of these include:

- Cost of Production: Developing specialized AI chips is an expensive process, and it requires significant investment in research and development. As the demand for AI chips grows, manufacturers will need to balance innovation with cost efficiency.

- Compatibility and Integration: AI chips must be compatible with existing software frameworks and hardware. As AI technology evolves, ensuring that new chips integrate seamlessly with legacy systems is a key challenge for developers.

- Data Privacy and Security: With AI chips being used to process large amounts of sensitive data, ensuring data privacy and security becomes even more critical. Manufacturers need to focus on creating secure AI chips that protect user data.

AI is one of the most profound things we’re working on as humanity. It’s more profound than fire or electricity.

Sundar Pichai, CEO of Google

AI Chips: Powering the Future of Artificial Intelligence

AI chips are crucial in the development and deployment of artificial intelligence (AI) technologies. These specialized processors are designed to efficiently handle the complex computational tasks required by machine learning and deep learning models. By providing faster processing speeds and lower latency, AI chips enable advancements in various fields, including autonomous driving, healthcare, edge computing, and robotics. As AI applications continue to grow, the demand for more powerful, energy-efficient AI chips will only increase.

In autonomous vehicles, AI chips process real-time data from cameras, sensors, and radar, enabling safer navigation and decision-making. In healthcare, AI chips support diagnostic tools and predictive algorithms, helping doctors detect diseases earlier and personalize treatments. For edge computing, AI chips process data locally, minimizing latency and reducing reliance on cloud systems, which is critical for applications in smart homes, industrial automation, and Internet of Things (IoT) devices.

The future of AI chips looks promising, with continued innovation in areas like quantum computing, edge devices, and cloud infrastructure. Quantum computing, though still in its early stages, could eventually speed up certain kinds of computation and allow for more complex models. Meanwhile, edge AI devices will continue to enable faster, localized processing for real-time applications, reducing the need for continuous cloud connectivity.

However, challenges such as high costs, system integration, and security must be addressed for AI chips to reach their full potential. Ensuring cost-effective manufacturing, seamless integration with existing infrastructure, and robust security measures will be crucial for widespread adoption. Ultimately, AI chips will remain the backbone of AI’s evolution, powering innovations in everything from autonomous systems to smart cities, and shaping the future of technology.

FAQ: What are AI chips?

An AI chip is a specialized processor designed for artificial intelligence tasks like machine learning and deep learning. Unlike traditional CPUs, which are general-purpose and execute tasks sequentially, AI chips are optimized for parallel processing, enabling them to handle large-scale data computations more efficiently.

The main types of AI chips include:

1. GPUs – Ideal for parallel tasks like training neural networks.

2. TPUs – Optimized for deep learning operations, developed by Google.

3. ASICs – Custom-designed for specific AI tasks, offering high speed and low latency.

4. FPGAs – Reconfigurable chips, useful for applications requiring flexibility.

AI chips provide speed, energy efficiency, parallelism, and scalability necessary for handling complex AI workloads. They enable real-time processing, making them vital for applications like autonomous vehicles, edge computing, and data centers.

AI chips are transforming industries such as:

1. Autonomous vehicles: For real-time decision-making using sensor data.

2. Healthcare: Enhancing medical imaging and diagnostics.

3. Edge computing: Enabling local AI processing on devices like IoT gadgets.

4. Smart cities: Powering traffic systems and energy grids.

Future AI chip development will focus on enhanced performance, energy efficiency, and security. Key trends include integration with quantum computing, the rise of edge AI chips for real-time processing, customized designs for specific tasks, and advances in neuromorphic computing. These innovations will drive AI’s evolution across industries, powering smarter, faster technologies.