- The proliferation of persuasive yet misleading content necessitates a vigilant approach to information consumption as AI technology continues to advance.

- By employing critical thinking skills and utilising reliable resources, individuals can effectively navigate the complexities of digital information and minimise the risk of falling prey to misinformation.

OUR TAKE

It is imperative for individuals to be proactive in discerning fact from fiction in this era defined by rapid technological advancements, particularly when faced with AI-generated misinformation. The responsibility lies not only with tech developers and media organisations but also with each of us as consumers of information. Building a culture of skepticism, promoting media literacy, and fostering critical analysis are essential steps toward safeguarding ourselves and society against the potential dangers posed by deceptive narratives.

Lily Yang, BTW reporter

The digital landscape has transformed the way we access and consume information, bringing both unprecedented opportunities and significant challenges. Among these challenges is the rise of AI-generated misinformation—a phenomenon that leverages sophisticated algorithms to create content that appears credible but is fundamentally false.

As this technology becomes more prevalent, individuals must develop the skills needed to navigate this complex environment. Understanding how AI tools are used to produce misleading content, and knowing how to verify what they read, are crucial to avoiding deception. This feature explores practical approaches to help individuals critically assess information and make informed decisions in an age when digital deception can easily obscure the truth.

People come to look up things they’ve encountered on the internet and find out whether they are true or not.

David Mikkelson, the co-founder of Snopes.

Understanding AI-generated misinformation

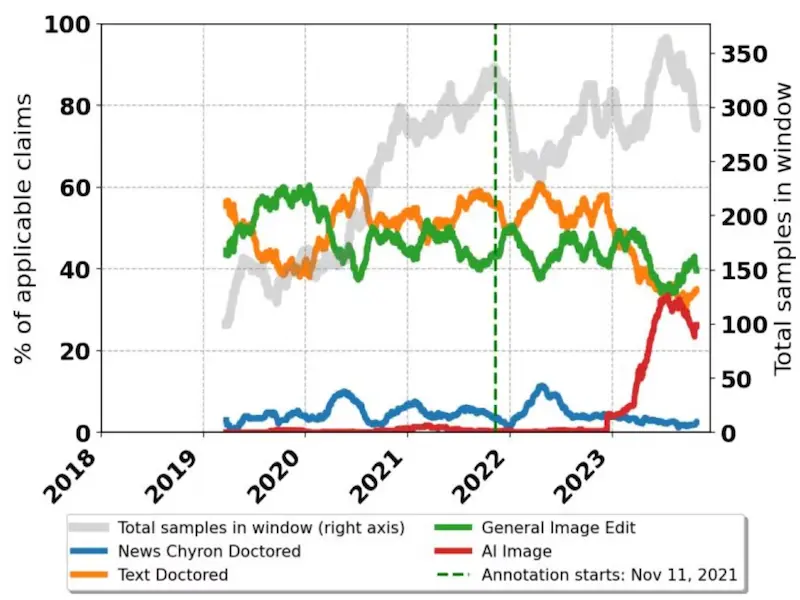

Misinformation refers to false or misleading information shared regardless of intent. With AI systems capable of generating text, images, and videos, the potential for creating plausible yet incorrect content has risen sharply. These AI models, trained on vast amounts of data, can produce articles, essays, and posts that closely resemble genuine human communication. As a result, distinguishing real information from deceptive narratives can be difficult.

The psychology behind AI-generated misinformation

The digital age has brought with it an overwhelming amount of information. This overload can lead to cognitive fatigue, making it harder for individuals to tell reliable sources from unreliable ones. The principle of social proof holds that when many people accept a piece of information, others are likely to follow suit and believe it too. AI-generated misinformation that gains traction on social media platforms can therefore trigger herd behaviour, which further extends its reach and influence.

Because humans are inherently social animals, they often rely on others for cues about what to believe and how to behave. In a similar way, handing the job of filtering or sorting information to AI can lead people to absorb misinformation if they do not engage critically with the content.

In addition, as technology develops, many people are placing more trust in AI and automated systems. This trust may lead users to miss flaws or biases in AI-generated content. When people regard AI as authoritative or objective, they may not consider that the information these systems produce could be misleading or false.

The psychology behind AI-generated misinformation is multifaceted, encompassing cognitive biases, emotional responses, social dynamics, and the influence of technology. Understanding these psychological mechanisms is essential for developing effective strategies to combat misinformation. By fostering awareness of these factors, educators, policymakers, and tech developers can work to enhance critical thinking, promote media literacy, and cultivate a more discerning public capable of navigating the complexities of the digital information landscape.

Strategies for identifying misinformation

Natural language processing

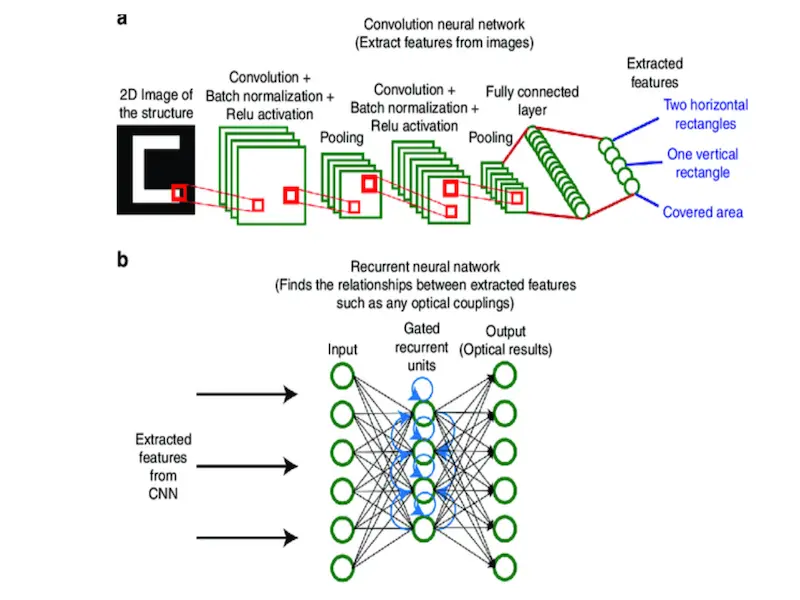

Natural language processing (NLP) is a branch of AI that focuses on the interaction between computers and human language. NLP algorithms analyse the structure and semantics of text, and some attempt to infer where it came from. By examining linguistic features, these algorithms can sometimes identify patterns common in AI-generated text, such as repetitiveness or unnatural phrasing.

For example, NLP-based tools can score the likelihood that a piece of text was generated by an AI model based on its syntactic structures and vocabulary. Such scores are probabilistic rather than conclusive, and they can misclassify human writing, but they help organisations trying to separate potentially misleading material from genuine discourse.
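To make the idea of a likelihood score more concrete, here is a deliberately simplified sketch. It is not how any named detection tool works: the three features (vocabulary diversity, repeated three-word phrases and variation in sentence length), their weights and the function names are all illustrative assumptions. Real detectors learn from large labelled datasets and still make mistakes.

```python
import re
import statistics
from collections import Counter


def stylometric_features(text: str) -> dict[str, float]:
    """Compute three surface features sometimes treated as weak signals of machine-written text."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not words:
        raise ValueError("text must contain at least one word")

    # Vocabulary diversity: distinct words divided by total words (lower = more repetitive).
    type_token_ratio = len(set(words)) / len(words)

    # Repetitiveness: share of three-word sequences that occur more than once.
    trigrams = Counter(zip(words, words[1:], words[2:]))
    total_trigrams = sum(trigrams.values())
    repeated = sum(count for count in trigrams.values() if count > 1)
    repeated_trigram_share = repeated / total_trigrams if total_trigrams else 0.0

    # "Burstiness": spread of sentence lengths in words (human writing tends to vary more).
    length_stdev = statistics.pstdev([len(s.split()) for s in sentences])

    return {
        "type_token_ratio": type_token_ratio,
        "repeated_trigram_share": repeated_trigram_share,
        "length_stdev": length_stdev,
    }


def ai_likeness_score(text: str) -> float:
    """Combine the features into a 0-1 score; higher means 'more machine-like' under these toy rules."""
    f = stylometric_features(text)
    # Hypothetical hand-picked weights (they sum to 1); real detectors learn weights from labelled data.
    return (
        0.5 * f["repeated_trigram_share"]
        + 0.3 * (1 - f["type_token_ratio"])
        + 0.2 / (1 + f["length_stdev"])
    )


repetitive = "The product is good. The product is good. The product is good. The product is good."
varied = ("Honestly, I was surprised. The battery lasted two full days of heavy use, "
          "which never happens with my older phones! Would I buy it again?")
print(round(ai_likeness_score(repetitive), 3))  # 0.925
print(round(ai_likeness_score(varied), 3))
```

The repetitive, uniform review scores far higher than the varied, conversational one. A single number like this is best read as a prompt for closer checking, not a verdict.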

Deep learning models

Deep learning models are at the forefront of AI content generation, powering systems such as OpenAI's GPT models. At the same time, other deep learning models are designed to detect AI-generated content by analysing features characteristic of machine-written text. These models often weigh stylistic elements, coherence, and complexity to judge whether a passage is more consistent with human authorship or AI generation.

Digital forensics tools

Digital forensics tools focus on the authenticity of multimedia content, including images and videos generated or altered by AI. Such tools use techniques such as reverse image search, metadata analysis, and anomaly detection to identify manipulated media. Services such as FotoForensics help users assess the integrity of an image by highlighting areas that may have been altered, including by AI.
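Reverse image search engines do not publish their exact methods, but one simple, widely described building block for spotting near-duplicate images is perceptual hashing. The sketch below implements average hashing on a greyscale image stored as a grid of 0 to 255 brightness values, an assumption made to keep the example dependency-free (real tools decode image files first). The function names are hypothetical. Two images whose hashes differ in only a few bits are likely to be copies of each other, even if one has been resized or brightened.

```python
def average_hash(pixels: list[list[int]], size: int = 8) -> int:
    """Return a size*size-bit perceptual hash of a greyscale image (rows of 0-255 values)."""
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")

    # Shrink the image to a size x size thumbnail by averaging each block of pixels.
    thumbnail = []
    for row in range(size):
        y0, y1 = row * height // size, (row + 1) * height // size
        for col in range(size):
            x0, x1 = col * width // size, (col + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            thumbnail.append(sum(block) / len(block))

    # One bit per thumbnail cell: 1 if the cell is brighter than the thumbnail's mean.
    mean = sum(thumbnail) / len(thumbnail)
    bits = 0
    for value in thumbnail:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes; small distances suggest the same picture."""
    return bin(a ^ b).count("1")


# Usage: a synthetic 16 x 16 gradient, a brightened copy and a mirrored version.
original = [[(x + y) * 8 for x in range(16)] for y in range(16)]
brightened = [[value + 10 for value in row] for row in original]
mirrored = [row[::-1] for row in original]

print(hamming_distance(average_hash(original), average_hash(brightened)))  # 0
print(hamming_distance(average_hash(original), average_hash(mirrored)))    # 32
```

Because each bit only records whether a region is brighter than average, a uniform brightness change leaves the hash untouched, while a genuinely different composition (here, a mirror image) flips half of the 64 bits.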

AI detection platforms

Several organisations and research projects are working on ways to identify AI-generated content. Here are a few notable ones.

- OpenAI: In addition to developing AI language models, OpenAI is also researching ways to flag AI-generated content through watermarking techniques and metadata tagging. Their efforts aim to ensure transparency and accountability in AI use.

- Hugging Face: Known for its collaborative approach to AI and NLP, Hugging Face provides tools that can help developers create models capable of detecting AI-generated content, encouraging the creation of ethical AI applications.

- Sensity AI: This company specialises in detecting deepfakes and synthetic media. By leveraging computer vision and machine learning technologies, Sensity offers solutions to identify altered content across various platforms, contributing to the fight against misinformation.

- Giant Language Model Test Room (GLTR): Developed by researchers from the MIT-IBM Watson AI Lab and Harvard NLP, GLTR analyses text to estimate how likely it is to have been generated by AI. By examining how predictable each word is to a language model, GLTR shows users the statistical patterns typical of machine-written text.
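GLTR's core idea can be sketched in a few lines. For each word, it asks a language model (GPT-2 in the published tool) how highly that word ranked among the model's predictions given the preceding text, then counts how many words fall in the top 10, top 100, top 1,000 or beyond; text that sits mostly in the top ranks is more likely to be machine-generated. In the sketch below, a toy bigram model trained on a tiny corpus stands in for GPT-2 (an assumption so the example runs without downloads), the rank thresholds are shrunk to suit that tiny vocabulary in the usage lines, and all function names are hypothetical.

```python
from collections import Counter
from typing import Optional


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count which word follows which in a whitespace-tokenised corpus (toy stand-in for GPT-2)."""
    words = corpus.lower().split()
    model: dict[str, Counter] = {}
    for prev, nxt in zip(words, words[1:]):
        model.setdefault(prev, Counter())[nxt] += 1
    return model


def token_ranks(text: str, model: dict[str, Counter]) -> list[Optional[int]]:
    """Rank of each word among the model's predictions for the previous word (1 = most likely)."""
    words = text.lower().split()
    ranks: list[Optional[int]] = []
    for prev, nxt in zip(words, words[1:]):
        predictions = [word for word, _ in model.get(prev, Counter()).most_common()]
        # None marks a word the model never saw in this position.
        ranks.append(predictions.index(nxt) + 1 if nxt in predictions else None)
    return ranks


def rank_histogram(ranks: list[Optional[int]], thresholds=(10, 100, 1000)) -> dict[str, int]:
    """Bucket ranks GLTR-style: top-10, top-100, top-1000 by default, then everything else."""
    labels = [f"top-{t}" for t in thresholds]
    histogram = dict.fromkeys(labels + ["beyond"], 0)
    for rank in ranks:
        for limit, label in zip(thresholds, labels):
            if rank is not None and rank <= limit:
                histogram[label] += 1
                break
        else:  # no bucket matched
            histogram["beyond"] += 1
    return histogram


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram(corpus)
for sentence in ("the cat sat on the mat", "the fish sat on the dog"):
    ranks = token_ranks(sentence, model)
    print(sentence, ranks, rank_histogram(ranks, thresholds=(1, 3)))
```

With this corpus, the familiar sentence gets ranks of 1, 1, 1, 1 and 2, so almost every word lands in the top bucket, while the scrambled one includes a word the model never predicted in that position. GLTR applies the same counting with a far larger model and vocabulary.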

Crowdsourced fact-checking

Another approach draws on the wider community. Fact-checking sites such as Snopes, FactCheck.org, and PolitiFact invite readers to submit questionable claims, which their researchers and journalists then investigate. Combining public reports with professional verification can help surface AI-generated misinformation more quickly, capitalising on collective knowledge and expertise.

Our goal is to apply the best practices of both journalism and scholarship, and to increase public knowledge and understanding.

FactCheck.org.

Pop Quiz

What is misinformation?

A. Information that is always intentional.

B. False or misleading information shared regardless of intent.

C. Only news that is published by official agencies.

D. Any opinion that differs from yours.

The correct answer is at the bottom of the article.

The consequences of AI-generated misinformation

AI tools can generate vast amounts of misleading content quickly and convincingly, making it easier for false information to spread across social media platforms and websites. This accelerated dissemination amplifies the potential reach and impact of misinformation, often outpacing efforts to debunk it.

As AI-generated misinformation becomes more prevalent, public trust in traditional media sources, governmental institutions, and scientific organisations may diminish. When individuals cannot easily distinguish between credible information and AI-generated fabrications, they may become skeptical of all information sources, leading to a generalised distrust.

During health crises, such as pandemics, AI-generated misinformation about treatments, vaccines, and preventive measures can undermine public health initiatives. When individuals encounter misleading claims, they may be less likely to follow expert guidance, resulting in poorer health outcomes for themselves and their communities.

AI-generated misinformation can damage businesses and industries by spreading false narratives about products, services, or financial stability. Fabricated reports can be used to manipulate stock prices or trigger consumer panic, ultimately affecting the broader economy.

The rise of AI-generated misinformation raises complex legal and ethical questions regarding accountability and responsibility. Determining who is liable for the dissemination of harmful content—whether it be the developers of AI systems, platform providers, or end-users—poses significant challenges for regulatory frameworks.

The correct answer is B, false or misleading information shared regardless of intent.